Today, more than ever, data protection is one of the major challenges facing companies. Pressure in this area is mounting: increasing legislation (such as the GDPR), new requirements from regulators, rising cyber threats, the challenge of user awareness, and more.

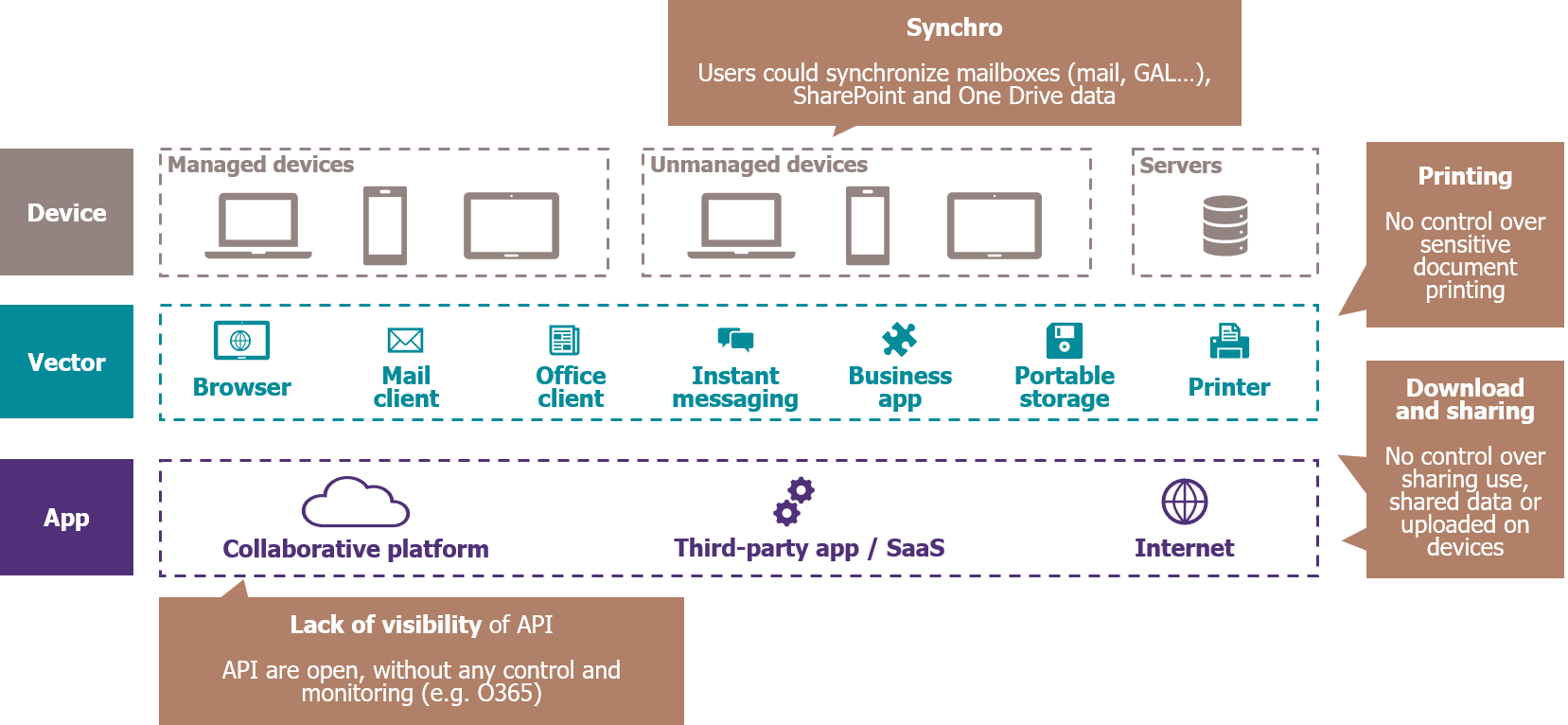

Meanwhile, the ecosystem in which data lives is growing steadily more complex. Information systems, in the midst of transformation, are opening up to the outside world and interconnecting with numerous public cloud services, which creates escape routes for the company’s data.

A variety of events can result in a data leak (employee negligence, internal fraud, third-party hacking, etc.), and the routes out are just as varied: email, Shadow IT, USB sticks, printers, etc. When an incident occurs, the consequences can be significant. The media persistently highlight cases of hacking that have led to data leaks at major companies, and this coverage can do lasting damage to corporate reputations. The associated financial losses can also be significant, compounded by regulatory penalties and lost confidence on the part of customers and partners.

Today’s IS: a complex ecosystem that can open many doors to data leaks

DLP, an under-used – but eminently feasible – approach

The major challenge that data leaks represent is not, however, insurmountable. Some sectors, banking in particular, have taken the lead by deploying tools that come under the heading of Data Leak Prevention (also known as Data Loss Prevention, or DLP). These tools enable companies to track sensitive data and apply rules that control data flows, in line with defined policies. The rules can be applied at endpoint level (workstations, servers, etc.), application level (Office 365, etc.), or network level (proxies, etc.).

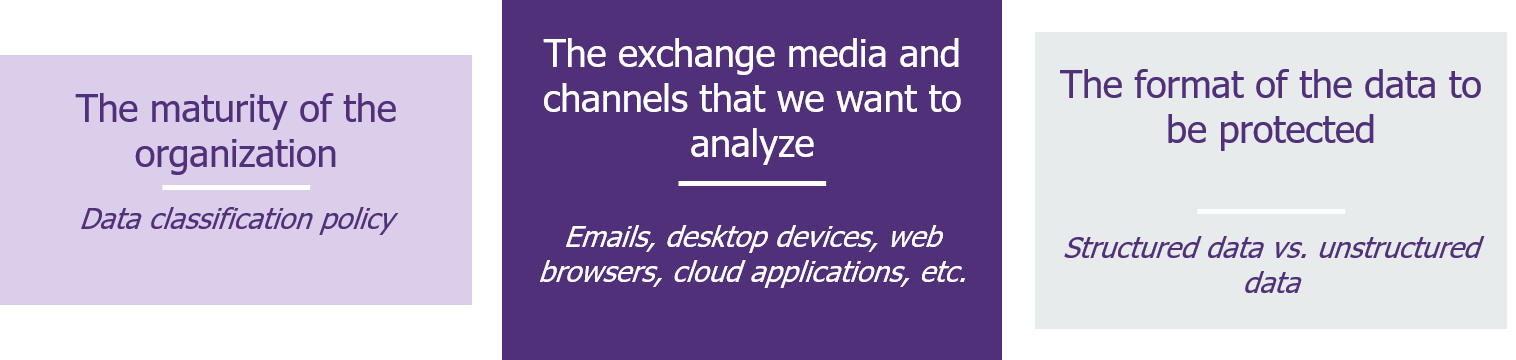

Implementing such solutions, however, requires a rigorously designed project involving both the Information Security Department and the company’s business functions. Three main factors can be used to reduce the complexity involved in this approach:

The issues to be addressed in such a project, and the corresponding technical solutions, will depend on the company’s objectives for mitigating the risk of data leakage, as well as on the maturity of its current practices and classification methods.

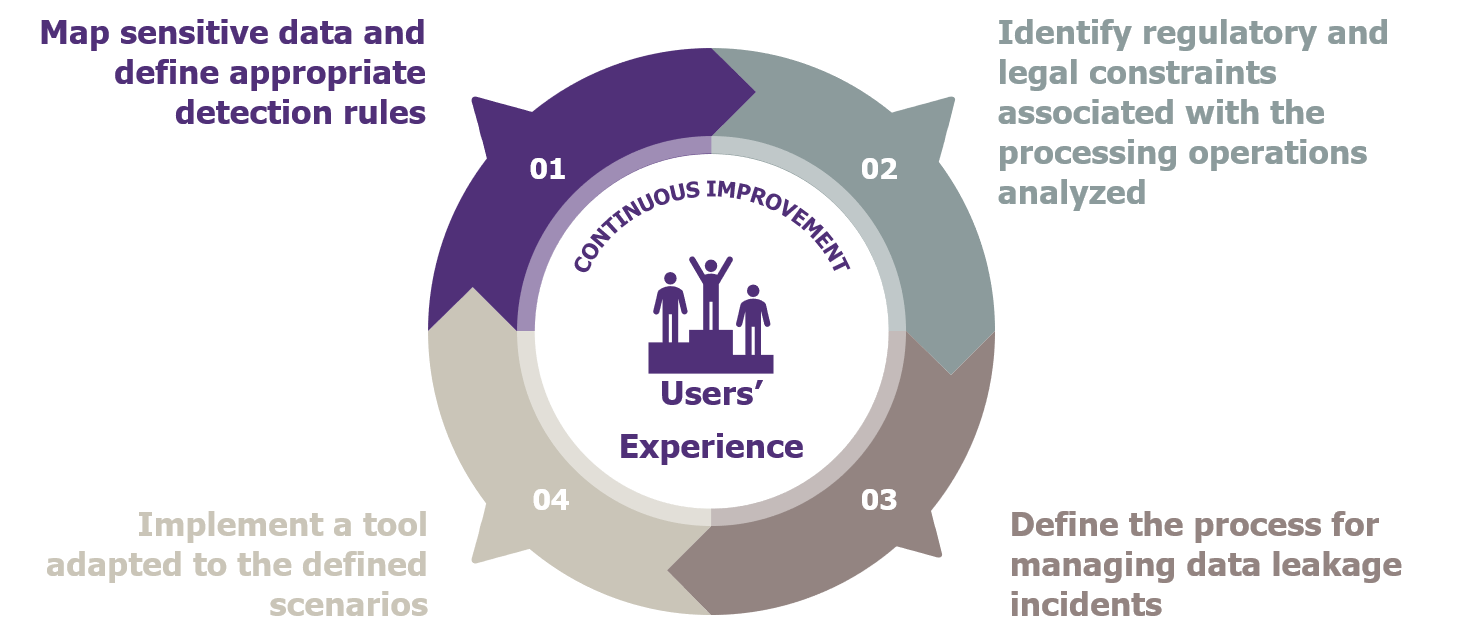

It’s also imperative, when implementing DLP solutions, to preserve the user experience: users should not find their day-to-day activities disrupted by the new protection mechanisms. Security objectives must therefore take into account the needs of the business, which may require sensitive information to be exchanged with the outside world.

The recipe for a successful DLP project

Firstly, selection of the DLP tool should be based on the objectives defined at the start of the project, in terms of the structure of the data to be protected and the channels of exchange to be analyzed.

Some market solutions are highly mature when it comes to detecting whether data is sensitive, regardless of the data structure or transmission channel. Structured data is simpler to detect because it’s easier to characterize (for example, a social security number or a credit card number has a defined number of digits). For unstructured data (which makes up 80% of all data, according to Gartner), detection can be based on analyzing the metadata added during classification.
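As a rough illustration of why structured data is easier to characterize, the sketch below looks for payment card numbers using a length-based pattern followed by the Luhn checksum. It is a minimal example under stated assumptions, not how any particular DLP product works: the names `CARD_PATTERN`, `luhn_valid`, and `find_card_numbers` are invented for this illustration, and real tools combine many more signals.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
# (Illustrative pattern only; commercial detectors are considerably more refined.)
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return the digit strings in text that look like valid card numbers."""
    matches = []
    for candidate in CARD_PATTERN.findall(text):
        digits = re.sub(r"[ -]", "", candidate)
        if luhn_valid(digits):
            matches.append(digits)
    return matches
```

Applied to the text `"Card 4111 1111 1111 1111, ref 1234 5678 9012 3456"`, the function keeps only the first number (a widely used test value); the second has the right shape but fails the checksum, which shows how a simple validity check can reduce false positives.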

Next, the project should be framed to define and formalize the four essential areas of a DLP project, which are the keys to success in deploying the solution:

Mapping sensitive data and defining the associated protection rules

Where a company has already mapped data and processing activities that are considered sensitive—as well as what it deems legitimate flows—this can serve as a basis for the development of the DLP policies and detailed protection rules during the project.

If such mapping has not been carried out, a DLP project cannot succeed without strong involvement from the business functions. The project team will need to connect with the relevant departments and activities to identify the sensitive data and the associated processing activities. This initial analysis makes it possible to identify the legitimate processing, storage, and transmission channels, both internal and external, and to separate them from everything else. Doing this successfully means working closely with key contacts in the various departments, who will need to be interviewed to gather the necessary information.

Following this, the project team can create the DLP policies to cover scenarios that represent data leaks.
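To make the link between the mapping and the resulting policies concrete, here is a minimal sketch in which a flow of sensitive data raises an alert unless it was identified as legitimate during the mapping. The categories, channels, and destinations are hypothetical placeholders, and the `Flow` and `evaluate` names are assumptions made for this example only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One observed transfer of data (all values used here are illustrative)."""
    data_category: str
    channel: str
    destination: str


# Hypothetical output of the mapping exercise carried out with the business functions.
SENSITIVE_CATEGORIES = {"payroll", "customer_pii"}
LEGITIMATE_FLOWS = {
    Flow("payroll", "sftp", "payroll-provider.example"),
    Flow("customer_pii", "email", "internal"),
}


def evaluate(flow: Flow) -> str:
    """Return 'allow' for non-sensitive or mapped flows, and 'alert' otherwise."""
    if flow.data_category not in SENSITIVE_CATEGORIES:
        return "allow"
    if flow in LEGITIMATE_FLOWS:
        return "allow"
    return "alert"
```

In this sketch, payroll data sent to the mapped provider over SFTP is allowed, while the same data copied to a USB drive triggers an alert. Starting from a short list of sensitive categories is consistent with covering the most critical data first.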

Feedback from major corporations, however, shows that a key success factor in such projects is knowing how to pick your fights: it’s unrealistic, at least at first, to try to implement every potential DLP policy. Achieving good coverage of the company’s most critical data already demonstrates a satisfactory level of maturity compared with current norms.

The identification of the legal and regulatory requirements associated with the processes being analyzed

The regulations that apply to sensitive data, such as personal data (for example, national data protection laws, the EU’s GDPR, etc.), impose specific limits on the extent to which such data can be legitimately processed. Moreover, companies operating in an international context have to comply with local regulatory frameworks, each of which has its own particularities. The result is a wide range of data processing rules to follow.

When it comes to legal compliance, it’s important to take the advice of the company’s own legal and compliance departments, as well as that of its various international entities, which can approve the analyses and protection rules to be applied to the data.

The main points to be addressed during this regulatory due diligence are the processing of personal data, the notification of users about the processing being carried out, the location where the processed data is stored, and the transfer channels used.

Defining the process for managing data leak incidents

The operational implementation of previously considered DLP scenarios then requires the project team to define the resources and processes that will be set in motion when a data leak is detected. These will, of course, need to be tailored to the company’s incident management processes:

- Who will receive the alerts related to potential data leaks (the SOC, if there is one; a dedicated team linked to a business function; etc.)?

- What resources are to be put in place during the investigation of an impacted area (for example, if a highly sensitive area is affected, will the inquiry need to maintain a certain level of confidentiality)?

- Depending on the level of criticality, which hierarchical and operational levels should be made aware?

Unlike with technical security incidents, it may be important to bring the relevant business teams, or the security manager of the part of the business in question, into the process in order to define the criticality of a data leak and its scope. In cases involving structured data, criticality can be evaluated simply, using correspondence tables, but the thinking required is of a completely different nature when unstructured data is involved (for example, an email from a company manager or a document related to a confidential project).
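For structured data, a correspondence table can be as simple as a lookup from data type and volume to a criticality level. The sketch below is one possible shape for such a table; the data types, thresholds, and level names are hypothetical, and anything not in the table is deliberately left unrated so that it goes to the business teams for assessment.

```python
from enum import IntEnum
from typing import Optional


class Criticality(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical table: for each data type, (minimum record count, criticality),
# listed in ascending order of record count.
CORRESPONDENCE_TABLE = {
    "credit_card": [(1, Criticality.MEDIUM), (100, Criticality.HIGH)],
    "employee_id": [(1, Criticality.LOW), (1000, Criticality.MEDIUM)],
}


def rate_incident(data_type: str, record_count: int) -> Optional[Criticality]:
    """Return the criticality from the table, or None when manual review is needed."""
    thresholds = CORRESPONDENCE_TABLE.get(data_type)
    if thresholds is None:
        return None  # unknown or unstructured data: escalate to the business teams
    level = None
    for minimum, criticality in thresholds:
        if record_count >= minimum:
            level = criticality
    return level
```

With these illustrative thresholds, five leaked card numbers are rated `MEDIUM` and 250 are rated `HIGH`, whereas a confidential project document has no entry and returns `None`.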

Strong sponsorship will also be required to ensure that the objectives and methods implemented under DLP are approved by the various business functions, the HR department, and employee representatives.

Implementing a tool tailored to the scenarios defined

Along with the definition of the incident management process, the supervision model and choice of tools must also be fleshed out. In addition to being able to address the detection scenarios defined, the tool selected will need to comply with certain prerequisites specific to the company’s ecosystem, as well as with the results of the regulatory due diligence performed. The criteria for the choice of technical solution should include the ability to:

- Integrate it with SOC tools (SIEM, etc.), and ideally with other enterprise security solutions (proxy, encryption tools/DRM, etc.);

- Tailor it to the business environment (collaborative platforms, file servers, etc.);

- Take into account the diversity of IT assets and of the information system as a whole, where the solution is deployed as an add-on or an application.

In addition, an effective DLP strategy must cover all channels of exchange and business use cases, so that no back door is left open (a typical pitfall is installing a DLP tool at server, mail, and file levels while leaving USB ports unprotected).
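A simple way to check for such gaps is to compare the channels identified during the mapping with those actually monitored by the deployed tools. The channel names below are illustrative assumptions, not an exhaustive list.

```python
# Hypothetical channel inventory produced during the mapping phase.
REQUIRED_CHANNELS = {"email", "web_proxy", "file_server", "usb", "printer"}


def uncovered_channels(required: set[str], covered: set[str]) -> list[str]:
    """Return the required channels that no DLP control currently covers."""
    return sorted(required - covered)
```

For example, if only `email`, `web_proxy`, and `file_server` are covered, the function returns `['printer', 'usb']`, which are exactly the exits left open.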

The four pillars of DLP

Implementing the solution doesn’t mark the end of the work on data leak prevention: DLP must be subject to continuous improvement. The study of alerts and false positives should feed regular reviews (at least every six months) to improve the detection scenarios in use. To do this, it’s good practice to anticipate, right from the beginning of the project, the resources that will be required from the operations (Run) teams, and to start with the basic scenarios.
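One way to prepare these reviews is to compute the false positive rate of each detection scenario from the alerts that analysts have already qualified. The sketch below assumes a hypothetical alert log made of `(scenario name, was it a false positive)` pairs; the 50% review threshold is an arbitrary example value.

```python
from collections import Counter
from typing import Iterable


def false_positive_rates(alerts: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Return the share of false positives for each scenario in the alert log."""
    totals: Counter = Counter()
    false_positives: Counter = Counter()
    for scenario, is_false_positive in alerts:
        totals[scenario] += 1
        if is_false_positive:
            false_positives[scenario] += 1
    return {name: false_positives[name] / totals[name] for name in totals}


def scenarios_to_review(alerts: Iterable[tuple[str, bool]], threshold: float = 0.5) -> list[str]:
    """Return the scenarios whose false positive rate meets or exceeds the threshold."""
    rates = false_positive_rates(alerts)
    return sorted(name for name, rate in rates.items() if rate >= threshold)
```

A scenario that produces mostly false positives is a candidate for tuning at the next review, while a scenario with few alerts and few false positives can be left as it is.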

It also makes sense to incorporate the DLP project’s objectives within a larger data protection program that includes the review of file server rights and permissions, authentication with conditional access, the integration of supervision with the SOC, and the encryption of files and applications.