The AI security market is entering a new phase

After several years of excitement and exploration, we are now witnessing a clear consolidation of the AI security solutions market. The AI security sector is entering a phase of maturity, as reflected in the evolution of our AI Security Solutions Radar. Since our previous publication (https://www.wavestone.com/fr/insight/radar-2024-des-solutions-de-securite-ia/), five major acquisitions have taken place:

- Cisco acquired Robust Intelligence in September 2024

- SAS acquired Hazy in November 2024

- H Company acquired Mithril Security at the end of 2024

- Nvidia acquired Gretel in March 2025

- Palo Alto Networks announced its intention to acquire Protect AI in April 2025

These moves reflect a clear desire by major IT players to secure their positions by absorbing key technology startups.

Simultaneously, our new mapping lists 94 solutions, compared to 88 in the October 2024 edition. Fifteen new solutions have entered the radar, while eight have been removed. These removals are mainly due to discontinued offerings or strategic repositioning: some startups failed to gain market traction, while others shifted focus to broader AI applications beyond cybersecurity.

Finally, a paradigm shift is underway: solutions are moving beyond a mere stacking of technical blocks and evolving into integrated defense architectures, designed to meet the long-term needs of large organizations. Interoperability, scalability, and alignment with the needs of large enterprises are becoming the new standards. AI cybersecurity is now asserting itself as a global strategy, no longer just a collection of ad hoc responses.

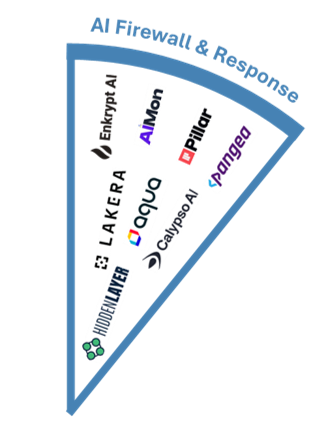

To reflect this evolution, we have updated our own mapping by creating a new category, AI Firewall & Response, which results from the merger of our Machine Learning Detection & Response and Secure Chat/LLM Firewall categories.

Best of breed or good enough? The integration dilemma

With the growing integration of AI security components into the offerings of major Cloud Providers (Microsoft Azure, AWS, Google Cloud), a strategic question arises:

Should we favor expert solutions or rely on the native capabilities of hyperscalers?

- Specialized solutions offer technical depth and targeted coverage, complementing existing security.

- Integrated components are easier to deploy, interoperable with existing infrastructure, and often sufficient for standard use cases.

This is not about choosing one over the other but about shedding light on the possibilities. Here is an overview of some security levers available through hyperscaler offerings.

Confidential Computing

This approach goes beyond securing data at rest or in transit: it aims to protect computations in progress, using secure enclaves. It ensures a high level of confidentiality throughout the lifecycle of AI models, sensitive data, or proprietary algorithms, by preventing any unauthorized access.

Filtering

Cloud Providers now integrate security filters to interact with AI more safely. The goal: detect or block undesirable or dangerous content. But these mechanisms go far beyond simple moderation: they play a key role in defending against adversarial attacks, such as prompt injections or jailbreaks, which aim to hijack model behavior.
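To make the idea of an input filter concrete, here is a minimal sketch of a screening step placed in front of a model. It is not how any hyperscaler implements its filters: the pattern list and the `screen_prompt` function are hypothetical, and real services rely on trained classifiers rather than a handful of regular expressions.

```python
import re

# Hypothetical patterns for illustration only; production filters use
# trained classifiers, not a short regex list.
INJECTION_PATTERNS = [
    re.compile(r"ignore\s+(all\s+)?(previous|prior|above)\s+instructions", re.I),
    re.compile(r"disregard\s+(the\s+)?system\s+prompt", re.I),
    re.compile(r"you\s+are\s+now\s+in\s+developer\s+mode", re.I),
]


def screen_prompt(prompt: str) -> dict:
    """Return a verdict and the list of patterns that matched the prompt."""
    hits = [p.pattern for p in INJECTION_PATTERNS if p.search(prompt)]
    return {"allowed": not hits, "matched": hits}
```

A caller would typically block or route to human review when `allowed` is false, and log `matched` for later analysis.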

Robustness Evaluation

This involves assessing how well an AI model withstands disruptions, errors, or targeted attacks. It covers:

- exposure to adversarial attacks,

- sensitivity to noisy data,

- stability over ambiguous prompts,

- resilience to extraction or manipulation attempts.

These tools offer a first automated assessment, useful before production deployment.
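As an illustration of the "sensitivity to noisy data" criterion, the sketch below measures how often a model's prediction survives random character-level typos. The function names, the noise model and the `toy_model` stand-in are all assumptions made for this example. Commercial tools cover far more perturbation types.

```python
import random
from typing import Callable


def add_typos(text: str, rate: float, rng: random.Random) -> str:
    """Replace each letter with a random lowercase letter with probability `rate`."""
    letters = "abcdefghijklmnopqrstuvwxyz"
    return "".join(
        rng.choice(letters) if ch.isalpha() and rng.random() < rate else ch
        for ch in text
    )


def stability_score(model: Callable[[str], str], inputs: list[str],
                    rate: float = 0.1, trials: int = 20, seed: int = 0) -> float:
    """Fraction of noisy variants whose prediction matches the clean prediction."""
    rng = random.Random(seed)
    same = total = 0
    for text in inputs:
        clean = model(text)
        for _ in range(trials):
            same += model(add_typos(text, rate, rng)) == clean
            total += 1
    return same / total if total else 1.0


def toy_model(text: str) -> str:
    """Stand-in classifier (hypothetical): flags text containing 'refund'."""
    return "billing" if "refund" in text.lower() else "other"
```

A score close to 1.0 suggests predictions are stable under this kind of noise. A low score at a modest `rate` is a signal to investigate before production deployment.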

Agentic AI: a cross-cutting risk, a distributed security approach

Among the trends drawing increasing attention from cybersecurity experts, agentic AI is gaining ground. These systems, capable of making decisions, planning actions, and interacting with complex environments, actually combine two types of vulnerabilities:

- those of traditional IT systems,

- and those specific to AI models.

The result: an expanded attack surface and potentially critical consequences. If misconfigured, an agent could access sensitive files, execute malicious code, or trigger unexpected side effects in a production environment.

One aggravating factor is the emergence of the Model Context Protocol (MCP), a standard currently gaining adoption that gives LLMs a uniform way to interact with third-party tools and services (email, calendar, drive…). While it facilitates the rise of agents, it also introduces new attack vectors:

- Exposure or theft of authentication tokens,

- Lack of authentication mechanisms for tools,

- Possibility of prompt injection attacks in seemingly harmless content,

- Or even compromise of an MCP server granting access to all connected services.
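Two of the vectors above, missing controls on tools and token exposure, can be partly mitigated by a gate between the agent and its tools. The sketch below is a simplified illustration and does not use any real MCP SDK: `ToolPolicy` and `redact_secrets` are hypothetical names, and the scope model is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Hypothetical per-agent policy: which tools may be called, with which scopes."""
    allowed_tools: dict[str, set[str]] = field(default_factory=dict)

    def check(self, tool: str, requested_scopes: set[str]) -> tuple[bool, str]:
        """Deny unknown tools and any scope beyond what the policy grants."""
        if tool not in self.allowed_tools:
            return False, f"tool '{tool}' is not on the allowlist"
        extra = requested_scopes - self.allowed_tools[tool]
        if extra:
            return False, f"scopes not granted: {sorted(extra)}"
        return True, "ok"


def redact_secrets(text: str, secrets: list[str]) -> str:
    """Mask known token values before text is logged or sent back to the model."""
    for s in secrets:
        if s:  # skip empty strings, which would match everywhere
            text = text.replace(s, "[REDACTED]")
    return text
```

For example, a policy of `ToolPolicy({"calendar": {"read"}})` would let an agent read the calendar but reject a write request or any call to an email tool. This does not address prompt injection in tool content, which needs filtering of the kind described earlier.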

Beyond technical vulnerabilities, the unpredictable behavior of agentic AI introduces a new layer of complexity. Because actions directly stem from AI model outputs, a misinterpretation or planning error can lead to major deviations from the original intent.

In this context, securing agentic AI does not fall under a single category. It requires cross-cutting coverage, mobilizing all components of our radar: robustness evaluation, monitoring, data protection, explainability, filtering, and risk management.

And this is precisely what we’re seeing in the market: the first responses to agentic AI security do not come from new players, but from additional features integrated into existing solutions. An emerging issue, then, but one already being addressed.

Our recommendations: which AI security components should be prioritized?

Given the evolution of threats, the growing complexity of AI systems (especially agents), and the diversity of available solutions, we recommend focusing efforts on three major categories of security, which complement each other.

AI Firewall & Response: continuous monitoring to prevent drift

Monitoring AI systems has become essential. Indeed, an AI can evolve unpredictably, degrade over time, or begin generating problematic responses without immediate detection. This is especially critical in the case of agentic AI, whose behavior can have a direct operational impact if left unchecked.

In the face of this volatility, it is crucial to detect weak signals in real time (prompt injection attempts, behavioral drift, emerging biases, etc.). That’s why it’s preferable to rely on expert solutions dedicated to detection and response, which offer specific analyses and alert mechanisms tailored to these threats.
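One simple way to picture weak-signal detection is a rolling monitor that compares the recent share of flagged responses with an expected baseline. This is only a sketch under stated assumptions: the class name, the window size and the fixed margin are hypothetical, and dedicated solutions use richer statistical and behavioral signals.

```python
from collections import deque


class FlagRateMonitor:
    """Alert when the recent share of flagged responses exceeds a baseline by a margin."""

    def __init__(self, baseline_rate: float, window: int = 100, margin: float = 0.05):
        self.baseline_rate = baseline_rate
        self.margin = margin
        self.events = deque(maxlen=window)  # keeps only the last `window` observations

    def record(self, flagged: bool) -> bool:
        """Store one observation; return True if the alert condition holds."""
        self.events.append(1 if flagged else 0)
        if len(self.events) < self.events.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.events) / len(self.events)
        return rate > self.baseline_rate + self.margin
```

The `flagged` input could come from any upstream check, such as a prompt-injection filter or a bias detector. The monitor itself is agnostic to the source of the signal.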

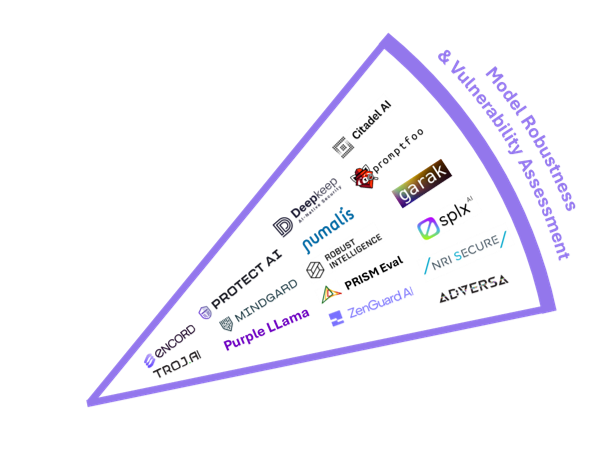

Model Robustness & Vulnerability Assessment: test to prevent

Before deploying a model to production, it is crucial to assess its robustness and resistance to attacks. This involves classic model testing, but also more offensive approaches such as AI Red Teaming, which consists of simulating real attacks to identify vulnerabilities that could be exploited by an attacker.

Again, the stakes are higher in the case of agentic AI: the consequences of unanticipated behavior can be severe, both in terms of security and compliance.

Specialized solutions offer significant value by enabling automated testing, maintaining awareness of emerging vulnerabilities, and supporting evidence collection for regulatory compliance (for example, in preparation for the AI Act). Given the high cost and time required to develop these capabilities in-house, outsourcing via specialized tools is often more efficient.

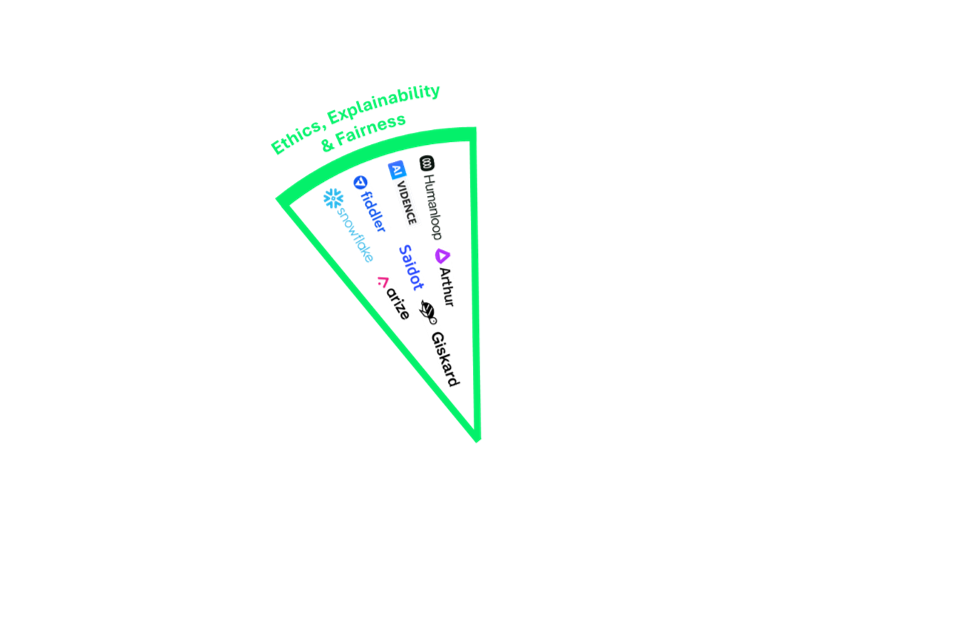

Ethics, Explainability & Fairness: preventing bias and algorithmic drift

Finally, the dimensions of ethics, transparency, and non-discrimination must be integrated from the design phase of AI systems. This involves regularly testing models to identify unintended biases or decisions that are difficult to explain.

Once again, agentic AI presents additional challenges: agents make decisions autonomously, in changing environments, with reasoning that is sometimes opaque. Understanding why an agent acted in a certain way then becomes crucial to prevent errors or injustices.

Specialized tools make it possible to audit models, measure their fairness and explainability, and align systems with recognized ethical frameworks. These solutions also offer updated testing frameworks, which are difficult to maintain internally, and thus help ensure AI that is both high-performing and responsible.
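To give a sense of what measuring fairness can mean in practice, the sketch below computes the demographic parity difference, one common fairness metric among many. The choice of metric and the function name are ours for illustration. The tools on the radar each define their own metrics and thresholds.

```python
from collections import defaultdict


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups.

    `predictions` holds 0/1 model decisions; `groups` holds the group label
    of each individual, in the same order.
    """
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives = defaultdict(int)
    counts = defaultdict(int)
    for pred, group in zip(predictions, groups):
        positives[group] += pred
        counts[group] += 1
    rates = [positives[g] / counts[g] for g in counts]
    return max(rates) - min(rates) if rates else 0.0
```

A value of 0 means every group receives positive decisions at the same rate. What gap is acceptable depends on the use case and the applicable ethical or regulatory framework.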

Conclusion: Building a Security Strategy for Enterprise AI

As artificial intelligence becomes deeply embedded in enterprise operations, securing AI systems is no longer optional: it is a strategic imperative. The rapid evolution of threats, the rise of agentic AI, and the growing complexity of models demand a shift from reactive measures to proactive, integrated security strategies.

Organizations must move beyond fragmented approaches and adopt a holistic framework that combines robustness testing, continuous monitoring, and ethical safeguards. The emergence of integrated defense architectures and the convergence of AI security categories signal a maturing market, one that is ready to support enterprise-grade deployments.

The challenge is clear: identify the right mix of specialized tools and native cloud capabilities, prioritize cross-cutting coverage, and ensure that AI systems remain trustworthy, resilient, and aligned with business objectives.

We thank Anthony APRUZZESE for his valuable contribution to the writing of this article.