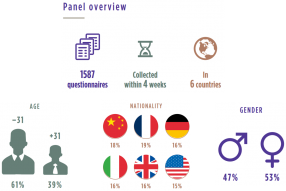

The results presented in this paper form a synthesis of the survey as a whole. Detailed results and analysis are available on our website. The results of this survey should not be viewed as scientific evidence. Rather, they are representative of global and national trends in the perception of privacy by individuals. The survey considers the responses of 1,587 participants, collected between July and August 2016, across 6 countries.

A consistent vision on an international scale

The countries surveyed, namely France, Italy, Germany, China, the United States and the United Kingdom, were chosen on the basis of their socio-economic environments and the diversity of their regulatory frameworks concerning privacy protection. These elements can influence the perception and opinion of citizens regarding the protection of personal data. However, despite these initial contextual differences, the collected responses show that the theme of privacy is perceived in a relatively similar way across the surveyed countries.

Younger generations, often perceived as “digital” citizens and more intrigued by the subject of privacy in a digital world, made up the majority of respondents.

There are, of course, differences and particularities: notably, German respondents attach more importance than their counterparts to the definition of privacy relating to personal freedom, while responses from the United States demonstrate less confidence in public institutions. Generally, however, there is greater global awareness among individuals about privacy and personal data topics. This can be explained by the borderless nature of data and the digital world, with the digital citizen expecting his or her privacy to be respected regardless of borders. This observation reinforces the importance of respecting privacy in digital projects, regardless of the country and population in question.

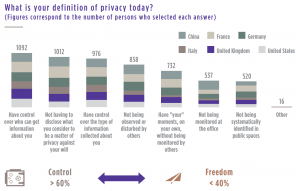

From freedom to control: evolution of the meaning of “privacy”

Privacy is traditionally seen as the possibility for an individual to retain some form of anonymity in his or her activities and to isolate himself or herself in order to best protect his or her interests. It is intimately linked to the notion of freedom. However, analysis of the survey results shows that this notion tends to disappear in favour of the control of information. We asked our respondents to select one or more definitions, each relating to one of these two notions.

The most frequently selected responses relate to control. This pattern is confirmed by observing the intermediate proposals. For example, “having control over the type of information collected about you” is a more widely selected response (more than half) than “having moments alone, without being monitored by others”, relating to freedom.

It is also important to provide customers and employees with assurance that they have control over their data. This is possible by providing individuals with simple and autonomous means of access.

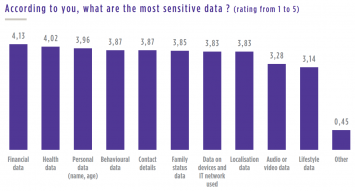

All personal data are viewed as sensitive in the eyes of citizens

When questioned about the level of sensitivity, the panel showed only slight differences in their responses. Citizens considered most of the proposed types of data sensitive. They did not distinguish data types whose leakage could have serious or even irreversible consequences (e.g. health data) from other data types (e.g. financial data), for which most countries have already implemented regulatory frameworks that protect individuals (for example, rapid reimbursement in the event of fraud). This demonstrates that, regardless of the type of personal data handled by a project, special attention must be given at least to the communication of protection levels.

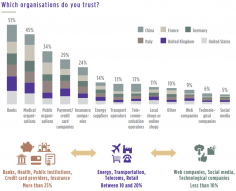

Trust varies greatly from one sector of activity to another

We asked respondents to indicate which type(s) of organisation(s) they trusted the most with regard to using their personal data for previously authorised use. We can differentiate between three main groups of actors.

- Firstly, the actors grouped under the category of “institutions” command the highest level of trust among respondents. This includes public institutions, semi-public institutions or entities from the traditional economy with which individuals have historically shared a relationship of trust. This is particularly the case given how such institutions have processed sensitive data throughout their history (medical data, etc.). We also find significant differences within this category, with more than half of respondents claiming to trust banks with the processing of their data. Image and reputation are therefore crucial for banks, which must meet customer expectations in order to retain their position as the number one trusted partner.

- Secondly, an intermediate category encompasses the actors of daily life such as transport operators and energy suppliers. Such B2C actors are carrying out a swift digital transformation and benefit from an existing relationship of trust.

- Thirdly and finally come actors in the digital economy, whether web giants or technology firms.

Mistrust towards such companies can be attributed to the amount of data they collect and use on individuals, as well as recent high-profile prosecution cases related to such use. However, this result reveals a paradox. Despite this evident lack of trust, individuals continue to frequently use the services provided by these actors, in part because of a lack of alternatives and in part because the information entrusted seems, often wrongly, harmless and insignificant in the eyes of the individual.

New technologies raising fears

Respondents highlighted four technologies as most likely to put their privacy in danger. What do they all have in common? They make it possible to collect data without this activity being under the control of the persons concerned. This would, for certain individuals, equate to a form of surveillance. On the other hand, technologies which provide citizens with the ability to choose the data they share, such as connected objects or Cloud services storing private information, are considered less risky in terms of privacy and therefore do not feature among the four.

Although not traditionally thought of as “sensitive”, data on individual behaviours and actions are now viewed as a significant stumbling block between customer expectations about the respect for privacy and the increasingly personalised customer relationship.

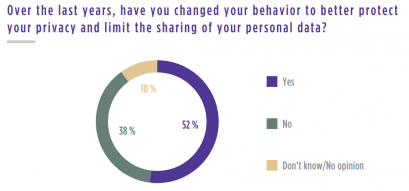

Citizens who take action to protect their digital privacy

More than half of respondents claimed that they had made certain changes to their online behaviour in order to better protect their data. This illustrates a heightened level of awareness by individuals concerning the protection of their privacy. It is worth analysing the means individuals use to ensure such protection. Our respondents described the measures they took, divided into two categories:

- Measures to limit the amount/type of data provided: provision of inaccurate/incomplete information when creating an account, such as the use of a nickname, skipping non-mandatory fields or the use of anonymous accounts…

- Measures to improve the security of the data provided: increasing the level of security of online accounts such as strengthening passwords, changing passwords regularly, checking access rights and being more attentive when sharing personal information over the Internet…

- In addition to such measures, we find more extreme solutions. These range from the complete closure of accounts on social networks, through the exclusive use of trusted and tested sites or technologies, to deleting history and cookies with every use of search engines.

While these individual initiatives can contribute to increasing the protection of privacy, they may conflict with new uses and innovation promoted by organisations, thus limiting or even preventing the personalisation of the customer relationship.

The survey methodology

The survey was carried out among a sample of 1,587 respondents from 6 countries: Germany, China, the United States, France, Italy and the United Kingdom. The answers were analysed by two Wavestone offices: Paris and Luxembourg. The sample of respondents was provided by a third-party organisation (SSIS). The Wavestone research department is familiar with this organisation, having previously worked with it on surveys on behalf of the European Commission. Before the emailing campaign, the questionnaires were designed and translated by Wavestone. The sample was defined so as to ensure its representativeness: the panel needed to be representative of the targeted population, without any discrimination by gender or socio-professional category. In addition, there were two selection criteria: respondents had to be adults and had to have Internet access. The survey was conducted from July to August 2016 and analysed from September to December of the same year. The final version was published at the beginning of 2017. All the data from this survey have been anonymised. The data were collected for statistical purposes only.