We have recently opened this blog to contributions from start-ups accelerated by our Shake’Up project. Hackuity rethinks vulnerability management with a platform that collects, standardizes and orchestrates automated and manual security assessment practices and enriches them with Cyber Threat Intelligence data sources, technical context elements and business impacts. Hackuity enables you to leverage your existing vulnerability detection arsenal, to prioritize the most important vulnerabilities, to save time on low-value tasks and reduce remediation costs, to gain access to a comprehensive and continuous view of the company’s security posture, and to meet compliance obligations.

After reviewing, in a first article, the state of the threat and the current issues in vulnerability management, this second article looks at the new approaches to consider for managing vulnerabilities more effectively, in particular the prioritization of vulnerability remediation proposed by Hackuity.

The advent of Risk-Based Vulnerability Management (RBVM)

Risk-Based Vulnerability Management (RBVM) is an approach that treats each vulnerability according to the risk it represents for a given company.

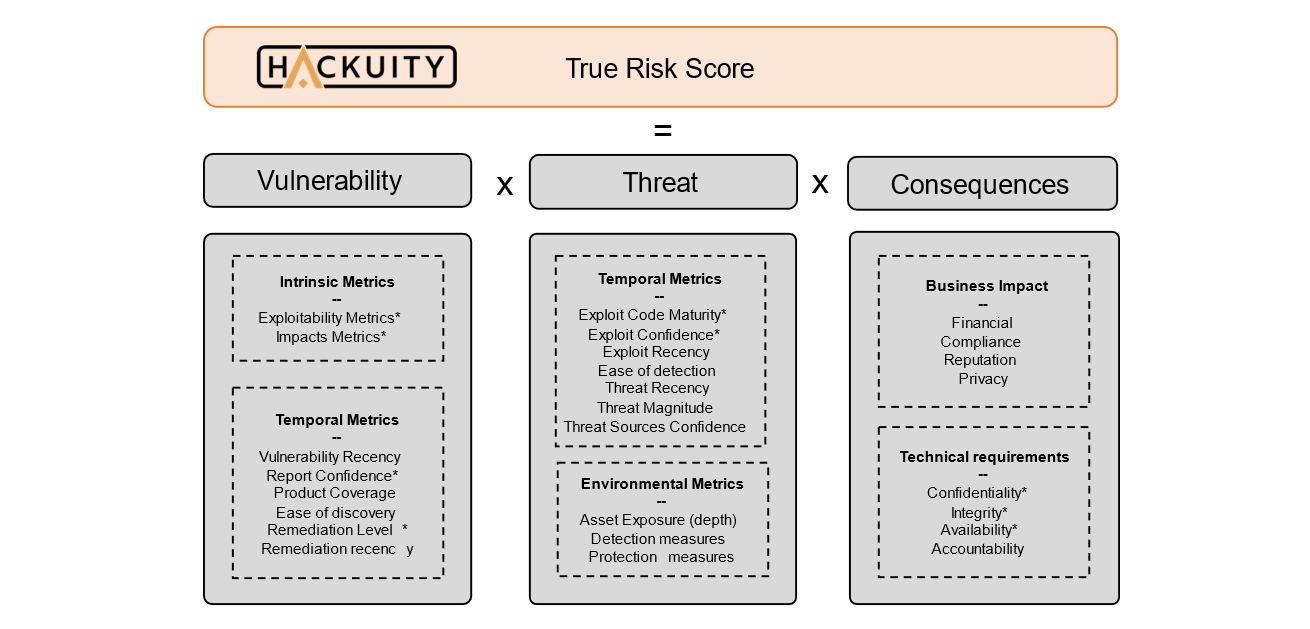

In this context, the classic formula for calculating a risk applies:

$$\text{Risk} = \text{Vulnerability} \times \text{Threat} \times \text{Impact}$$

The first part of the formula, vulnerability × threat, can also be seen as a probability: the chance that a given vulnerability will be discovered and exploited by a threat actor in the organization’s specific technical context. The last part of the formula describes the consequences, or impact, of a successful attack by a threat actor in the company’s business context.

In essence, this is the approach adopted by CVSS, a standard developed by FIRST (Forum of Incident Response and Security Teams), originally to quantify the technical severity of a vulnerability. Through three metric groups (base, temporal, environmental), the full CVSS score (now in version 3.1) is supposed to reflect the real risk of each vulnerability in the context of each company.

Source: FIRST (https://www.first.org/cvss/specification-document)

Our purpose here is not to describe CVSS, so we assume that the reader is familiar with the concept. The CVSS score has many advantages, the main ones being:

- The only standard on the market available to quantify the criticality of a vulnerability,

- A detailed and transparent algorithm,

- A scoring widely adopted by the industry,

- Several worldwide reference databases available (in particular to qualify the criticality of CVEs).

However, it also has many limitations, the main ones being:

- Its low granularity: each metric takes one of a few predetermined categorical values (e.g., low, medium, high), which limits its ability to discriminate between vulnerabilities.

- Its design for scoring vulnerabilities individually: it is impossible to evaluate the criticality of a complete attack scenario with CVSS. For example, some cyber-attacks chain several low-severity vulnerabilities to compromise an entire perimeter, yet CVSS scores each of these vulnerabilities independently. The auditor must therefore present a global scenario to highlight the overall risk, and cannot rely solely on CVSS to do so, since its scores were not designed to be aggregated.

- Its arbitrary nature: some weights in the algorithm seem to be arbitrary figures, which makes the resulting values hard to interpret. As a result, two professionals scoring the same vulnerability can sometimes arrive at significantly different CVSS scores.
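To make the granularity limitation concrete, here is a minimal sketch of the CVSS v3.1 base-score equations, using the metric weights and the Roundup function published in the FIRST specification; the function names are our own, and the temporal and environmental groups are left out. Enumerating every combination of base metric values shows that the 2,592 possible vectors collapse onto at most 101 distinct scores (0.0 to 10.0 in steps of 0.1). It is an illustration, not a validated calculator.

```python
import itertools
import math

# Base metric weights from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # PR weighs more when scope changes
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in CVSS v3.1 Appendix A."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def base_score(av, ac, pr, ui, scope, c, i, a) -> float:
    changed = scope == "C"
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])  # impact sub-score
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr_weight = (PR_CHANGED if changed else PR_UNCHANGED)[pr]
    exploitability = 8.22 * AV[av] * AC[ac] * pr_weight * UI[ui]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


def score_vector(vector: str) -> float:
    """Score a vector such as 'CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H'."""
    parts = dict(part.split(":") for part in vector.split("/")[1:])
    return base_score(parts["AV"], parts["AC"], parts["PR"], parts["UI"],
                      parts["S"], parts["C"], parts["I"], parts["A"])


# Every combination of base metric values (4*2*3*2*2*3*3*3 = 2,592 vectors).
all_scores = {
    base_score(*combo)
    for combo in itertools.product(AV, AC, PR_UNCHANGED, UI, "UC", CIA, CIA, CIA)
}
print(score_vector("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))  # 9.8
print(len(all_scores), "distinct base scores")
```

Whatever the exact count printed, it can never exceed 101, which is why many very different vulnerabilities end up sharing the same score.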

It is also worth recalling that public CVSS scores, such as those referenced in the NVD, are only base scores. They represent the intrinsic criticality of a vulnerability, not the risk that this vulnerability represents for the company. In other words, they answer the question “Is it dangerous?” but not “Is it dangerous for my company right now?”.

Effective vulnerability management must take into account not only the base score, but also the temporal and environmental metrics. FIRST provides the framework, but NIST cannot compute the CVSS score on behalf of each enterprise, as doing so requires knowledge of the criticality of the assets, the controls in place, the exploitability of the vulnerability in this specific context, and the intensity of the actual, current threat.

In practice, however, we find that nearly 45% of the companies surveyed, of all sizes, use the CVSS base score as their sole metric for quantifying the criticality of vulnerabilities.

Whatever the merits of this approach, relying on this single metric does not solve the industry’s major problem, which remains the volume of vulnerabilities to be addressed.

Of the 123,454 vulnerabilities (CVEs) identified as of 01/15/2020, more than 16K had a CVSS v2.0 base score rated critical (i.e., more than 13% of the total).

Beyond CVSS?

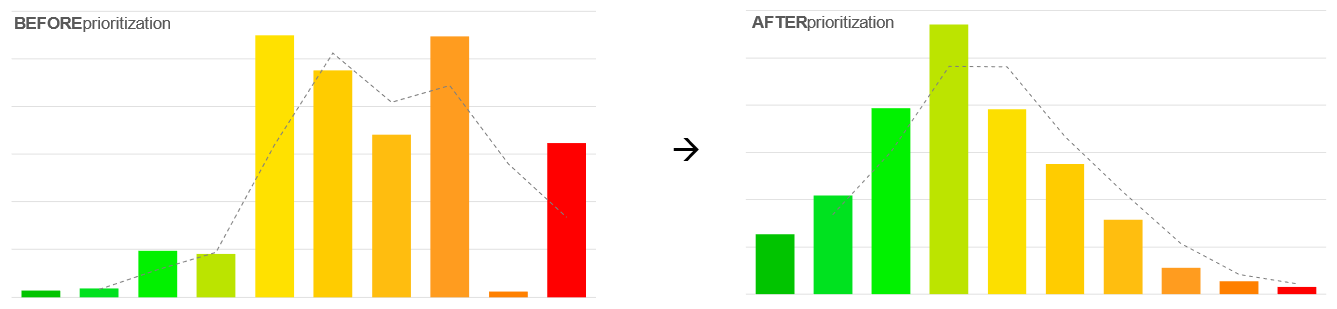

The objective of prioritization is therefore to reduce the stock of vulnerabilities by singling out the most critical ones, so that remediation teams and resources can focus on the vulnerabilities that matter most.

Moreover, there is no doubt that the daily flood of new vulnerabilities reported by the detection arsenal can no longer be managed manually. It is totally unrealistic to examine, analyze and prioritize every identified vulnerability by hand.

Automation should enable teams to work more efficiently, reducing repetitive and/or low value-added manual tasks and processes.

To meet these needs and address the limitations of CVSS, RBVM vendors are introducing:

- New, proprietary risk metrics (scores) that complement, override or replace CVSS,

- Automation of analysis and measurement tasks, including correlation with threat sources (CTI) to continuously qualify the threat intensity associated with each vulnerability.

More generally, the RBVM approach takes numerous evaluation metrics into account to establish a score based on context and threat. There seems to be a consensus on four main categories of criteria:

1/ The vulnerability itself, i.e. its individual, intrinsic characteristics.

Through these criteria, the aim is to measure the severity of a vulnerability using metrics that remain constant over time and across environments, such as the privileges required to exploit the vulnerability or its attack vector (remotely, from the same local network, with physical access, etc.).

For this category, the CVSS base score (generally in its version 2.0, to ensure coverage of older vulnerabilities) is a solid starting point for analyzing the intrinsic criticality of the vulnerability. This is the score used by most solutions on the market.

2/ The external threat, used to quantify the current intensity of the threat associated with each vulnerability.

The metrics used reflect characteristics that may change over time but not from one technical environment to another.

“Is the vulnerability a hot topic on discussion forums, the darknet and social networks? Has an exploit been published for it, or is it currently being exploited by a particularly virulent ransomware?”

The availability of an “exploit” for a vulnerability is, for example, an important factor used by most risk-based vulnerability management solutions. According to a Tenable Research study, 76% of vulnerabilities with a CVSS base score above 7 have no exploit available.

Source: (https://fr.tenable.com/research)

This means that companies focusing on fixing all of their vulnerabilities rated “high” or “critical” by CVSS would spend three quarters of their time plugging holes that ultimately represent little risk. For better operational efficiency, it is therefore appropriate to focus remediation efforts on vulnerabilities for which an exploit has already been released.
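A minimal sketch of this rule, assuming each finding carries a CVSS base score and an “exploit available” flag fed from exploit databases or CTI sources. The `Vuln` class and the sample identifiers are hypothetical; the 7.0 threshold is the lower bound of the CVSS v3 “High” rating.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    vuln_id: str
    cvss_base: float
    exploit_available: bool  # e.g. set from exploit databases or CTI feeds


def remediation_queue(vulns):
    """Keep 'high'/'critical' findings (CVSS >= 7.0) with a known exploit, worst first."""
    urgent = [v for v in vulns if v.cvss_base >= 7.0 and v.exploit_available]
    return sorted(urgent, key=lambda v: v.cvss_base, reverse=True)


findings = [
    Vuln("VULN-A", 9.8, exploit_available=False),
    Vuln("VULN-B", 7.5, exploit_available=True),
    Vuln("VULN-C", 8.1, exploit_available=True),
    Vuln("VULN-D", 5.0, exploit_available=True),
]
print([v.vuln_id for v in remediation_queue(findings)])  # ['VULN-C', 'VULN-B']
```

VULN-A drops out despite its 9.8 score: under this rule it must be re-evaluated as soon as an exploit appears, which is why the exploit flag has to be refreshed continuously.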

But this is far from the only relevant criterion. In the absence of a known exploit, the age of the vulnerability can be used to compute its probability of exploitation, through a statistical approach based on measured occurrences of exploitation. Some initiatives, such as EPSS (Exploit Prediction Scoring System[1]), even try to predict the “weaponization” of vulnerabilities.

Like the age of the vulnerability, the age of the exploit strongly influences the probability of exploitation. For example, the exploitation rate of a CVE skyrockets as soon as an exploit is published, then progressively decreases.
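One way to turn this observation into a scoring input is a weight that peaks on the exploit’s publication date and then decays. The sketch below assumes an exponential decay toward a floor; the half-life and floor values are hypothetical, chosen for illustration rather than derived from measured exploitation statistics.

```python
import math
from datetime import date
from typing import Optional

# Hypothetical parameters, chosen for illustration rather than measured.
HALF_LIFE_DAYS = 90  # how fast the post-publication spike fades
FLOOR = 0.2          # old exploits keep some residual weight


def exploit_age_factor(exploit_published: Optional[date], today: date) -> float:
    """Weight in [0, 1] that peaks on the exploit's publication date, then decays.

    Returns 0.0 when no exploit is known, leaving the probability estimate to
    other criteria (vulnerability age, EPSS-style predictions, ...).
    """
    if exploit_published is None:
        return 0.0
    age_days = max((today - exploit_published).days, 0)
    return FLOOR + (1 - FLOOR) * math.pow(0.5, age_days / HALF_LIFE_DAYS)


today = date(2020, 1, 15)
print(exploit_age_factor(date(2020, 1, 15), today))   # 1.0 (published today)
print(exploit_age_factor(date(2019, 10, 17), today))  # about 0.6 after 90 days
```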

More generally, threat intensity is an important metric in the prioritization algorithm. Beyond statistical approaches, it can be measured by monitoring CTI sources, social networks and various publications, for instance by counting how often a vulnerability is mentioned in cybercriminal forum discussions. This makes it possible to detect that a new or particularly active malware exploits a vulnerability, and therefore to raise its criticality score.
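The counting approach can be sketched as follows, assuming forum posts have already been collected as plain text. The saturation cap and the sample posts are hypothetical, and the CVE identifiers are placeholders; a real pipeline would also weight sources and track counts over time.

```python
import re
from collections import Counter

CVE_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}", re.IGNORECASE)


def mention_counts(posts):
    """Count, for each CVE identifier, how many posts mention it (once per post)."""
    counts = Counter()
    for post in posts:
        counts.update({match.upper() for match in CVE_PATTERN.findall(post)})
    return counts


def threat_intensity(cve_id, counts, saturation=10):
    """Map a mention count onto [0, 1]; 'saturation' is a hypothetical cap."""
    return min(counts.get(cve_id, 0) / saturation, 1.0)


# Hypothetical forum excerpts; the CVE identifiers are placeholders.
posts = [
    "New loader spreading through CVE-2099-0001, PoC in the thread",
    "anyone got a working chain for cve-2099-0001?",
    "Old news: CVE-2099-0002 patched months ago",
]
counts = mention_counts(posts)
print(counts["CVE-2099-0001"], threat_intensity("CVE-2099-0001", counts))  # 2 0.2
```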

Many other indicators can be integrated to refine the relevance of vulnerability prioritization. The Hackuity solution takes into account more than 10 criteria in addition to the CVSS metrics to compute its “True Risk Score”:

Beyond the choice of these criteria and the algorithm itself, the type and quality of the CTI sources that continuously feed these metrics are a major concern.

These sources include the numerous open sources (OSINT) on vulnerabilities and threats (NIST-NVD, Exploit-db, Metasploit, Vuldb, PacketStorm, …), some of which are consolidated through open-source initiatives such as VIA4CVE (https://github.com/cve-search/VIA4CVE).

There are also a large number of private and commercial players offering CTI feeds with varying levels of specialization in vulnerability intelligence.

3/ The technical context or the unique characteristics of the environment in which the asset is located.

This category measures the probability (or difficulty) of exploiting a vulnerability in the specific context of each organization.

“Is the asset exposed on the Internet or hidden somewhere in the company’s datacenter? What are the technical measures (protection, detection) that make it more or less vulnerable to attacks?”

While some vendors simply infer that an asset is exposed to the Internet from its IP addressing scheme, others, like Hackuity, seek to measure the depth of the attack trees needed to exploit the vulnerability in the company’s information system (IS).
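The two approaches can be contrasted in a short sketch. The first function is the naive IP-scheme heuristic, built on Python’s standard `ipaddress` module. The second approximates “attack depth” as the shortest path, found by breadth-first search, in a reachability graph between assets; this graph and its contents are hypothetical, and a shortest-path count is a deliberate simplification of attack-tree analysis, not Hackuity’s method.

```python
import ipaddress
from collections import deque


def naive_exposure(ip: str) -> bool:
    """IP-scheme heuristic: any globally routable address counts as Internet-exposed."""
    return ipaddress.ip_address(ip).is_global


def attack_depth(reachability, entry, target):
    """Fewest hops from an entry point to the target (breadth-first search), or None."""
    seen = {entry}
    queue = deque([(entry, 0)])
    while queue:
        node, depth = queue.popleft()
        if node == target:
            return depth
        for neighbour in reachability.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, depth + 1))
    return None  # target unreachable from this entry point


# Hypothetical network: which asset can reach which (e.g. from flow or firewall data).
reachability = {
    "internet": ["web-01"],
    "web-01": ["app-01"],
    "app-01": ["db-01"],
}
print(naive_exposure("10.0.0.12"), attack_depth(reachability, "internet", "db-01"))  # False 3
```

The naive heuristic declares the database server unexposed because of its private address, while the graph shows it is three hops from the Internet: reachable, but harder to exploit than a front-end server.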

These characteristics are by definition specific to each environment. This information must therefore be obtained or inferred, in particular by feeding the prioritization formula with contextual data about the assets. For example, such data may already exist in internal repositories and be extracted from them.

4/ The business criticality of the asset.

This involves measuring the consequences, or impact, of a successful attack by a threat actor in the company’s business context.

“Is the asset affected by the vulnerability critical to the organization in one way or another? Does it host sensitive or personal data? What would be the financial, reputational or compliance impact on the company if the vulnerability were exploited?”

As with the technical context, these characteristics are specific to each environment. They may be entered manually or derived from risk analysis results such as Business Impact Analyses.
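To show how the four categories can be combined in the spirit of the risk formula (vulnerability × threat as a probability in the technical context, multiplied by impact), here is a minimal sketch. It assumes each criterion has already been normalized to [0, 1]; the plain product and the sample values are hypothetical and far simpler than any commercial scoring model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    intrinsic: float          # 1/ intrinsic severity, e.g. CVSS base score / 10
    threat: float             # 2/ current threat intensity, in [0, 1]
    exposure: float           # 3/ ease of exploitation in this technical context, in [0, 1]
    asset_criticality: float  # 4/ business impact of the affected asset, in [0, 1]


def risk_score(f: Finding) -> float:
    """Risk = (vulnerability x threat, in context) x impact, scaled to 0-100."""
    likelihood = f.intrinsic * f.threat * f.exposure
    return round(100 * likelihood * f.asset_criticality, 1)


# Same intrinsic severity, very different risk once context is taken into account.
exposed_erp = Finding(intrinsic=0.98, threat=0.9, exposure=1.0, asset_criticality=0.9)
lab_server = Finding(intrinsic=0.98, threat=0.9, exposure=0.2, asset_criticality=0.1)
print(risk_score(exposed_erp), risk_score(lab_server))
```

A multiplicative form means that any factor close to zero (an unreachable asset, a negligible business impact) pulls the whole score down, which is the intended behavior of context-based prioritization.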

To conclude on RBVM: whatever the degree of automation provided by the solution, it only reaches its full potential when fed with contextual elements that the tool cannot guess (business impacts, technical environment of the assets, organization, processes, etc.).

Beyond RBVM: Vulnerability Prioritization Technologies (VPTs)

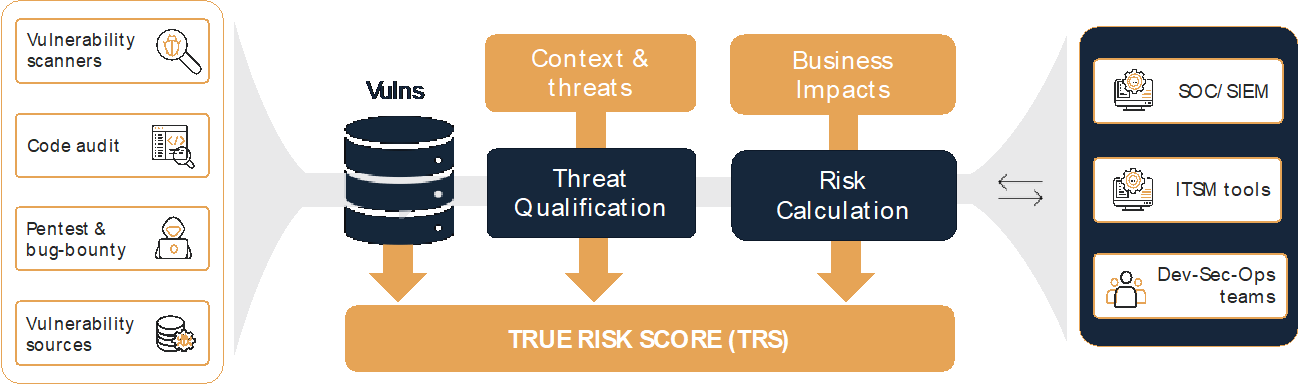

While the major market leaders in vulnerability detection have adopted a risk-based approach to Vulnerability Management, they have not addressed the main problem associated with the “best-of-breed” approach to detection: companies use multiple detection tools and practices to ensure complete and effective coverage of their technical perimeter.

Average number of detection tools by company size / Hackuity – Panel of 93 companies

As mentioned above, this necessary reliance on a heterogeneous arsenal leads to a fragmented, unconsolidated view of the situation, which limits scalability and, as the volume of vulnerabilities grows, causes costs to explode.

To address this problem, emerging players that Gartner calls VPTs (Vulnerability Prioritization Technologies), such as Hackuity, make tool-agnostic use of existing sources of vulnerability data.

They collect and centralize vulnerabilities from the company’s entire detection arsenal: multiple practices (pentest, bug-bounty, red team, etc.), vulnerability detection solution providers (vulnerability scans, SAST, DAST, IAST, SCA, etc.) and vulnerability watch feeds. The main features of VPT solutions are described below.

Functional diagram of the Hackuity solution

A comprehensive view of the state of the stock of vulnerabilities

Automating the collection of vulnerabilities enables security teams to have, sometimes for the first time, a consolidated and centralized view of the company’s stock of vulnerabilities, regardless of the solutions or detection practices implemented.

A crucial operation, and one that is very rarely performed, is the conversion of proprietary formats into a normalized format. This allows clones of the same vulnerability identified by several sources to be deduplicated (e.g. the same SQL injection found both during a penetration test and during a vulnerability scan).
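The normalization and deduplication step can be sketched with one adapter per source format and a deduplication key. Both input formats below are hypothetical, and keying on (asset, CWE, location) is an assumption: real tools need fuzzier matching, but the principle of mapping everything onto one schema before comparing is the same.

```python
from dataclasses import dataclass, field


@dataclass
class NormalizedVuln:
    asset: str
    cwe: str       # weakness class, e.g. "CWE-89" for SQL injection
    location: str  # URL path, port, package...
    sources: set = field(default_factory=set)


def from_scanner(record):
    """Adapter for a hypothetical vulnerability-scanner export."""
    return NormalizedVuln(record["host"].lower(), record["cwe"], record["path"], {"scanner"})


def from_pentest(record):
    """Adapter for a hypothetical penetration-test report format."""
    return NormalizedVuln(record["target"].lower(), f"CWE-{record['cwe_id']}",
                          record["endpoint"], {"pentest"})


def deduplicate(vulns):
    """Merge findings describing the same weakness at the same place on the same asset."""
    merged = {}
    for vuln in vulns:
        key = (vuln.asset, vuln.cwe, vuln.location)
        if key in merged:
            merged[key].sources |= vuln.sources  # keep track of every reporting source
        else:
            merged[key] = vuln
    return list(merged.values())


findings = [
    from_scanner({"host": "WEB-01", "cwe": "CWE-89", "path": "/login"}),
    from_pentest({"target": "web-01", "cwe_id": 89, "endpoint": "/login"}),
]
unique = deduplicate(findings)
print(len(unique), sorted(unique[0].sources))  # 1 ['pentest', 'scanner']
```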

As such, Hackuity’s vulnerability meta-repository is a multilingual knowledge base that provides a unified and standardized description of all vulnerabilities, including corrective actions, patches, remediation costs, or exploitability, with no loss of information from the original source.

The establishment and enrichment of an inventory of assets

In practice, very few companies have an asset inventory (CMDB, ITAM, …) that they consider complete, or even reliable. This is an endemic problem in the field and sometimes the main obstacle to implementing an efficient vulnerability management policy. To solve it, some solutions build and maintain the company’s asset inventory dynamically and continuously as part of their operation. This inventory is established by analyzing and correlating the technical data collected (e.g. the software stack installed on a server, its various aliases, etc.) and provides an asset database that is continuously kept up to date with data from multiple sources.
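Correlating aliases into assets is essentially a grouping problem. The sketch below uses a union-find structure: raw records that share any identifier (hostname, IP address, FQDN) are merged into one asset, and their software stacks are combined. The record layout and sample data are hypothetical.

```python
def correlate_assets(records):
    """Group raw records sharing any identifier (hostname, IP, FQDN...) into one asset."""
    parent = list(range(len(records)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    first_seen = {}  # identifier -> index of the first record carrying it
    for idx, record in enumerate(records):
        for ident in record["identifiers"]:
            if ident in first_seen:
                parent[find(idx)] = find(first_seen[ident])  # union the two groups
            else:
                first_seen[ident] = idx

    assets = {}
    for idx, record in enumerate(records):
        asset = assets.setdefault(find(idx), {"identifiers": set(), "software": set()})
        asset["identifiers"].update(record["identifiers"])
        asset["software"].update(record.get("software", ()))
    return list(assets.values())


# Hypothetical records from a scanner, an EDR agent and a CMDB export.
records = [
    {"identifiers": ["srv-db-01", "10.0.0.12"], "software": ["postgresql"]},
    {"identifiers": ["10.0.0.12", "srv-db-01.corp.example"], "software": ["openssh"]},
    {"identifiers": ["10.0.0.99"]},
]
for asset in correlate_assets(records):
    print(sorted(asset["identifiers"]), sorted(asset["software"]))
```

The first two records share the address 10.0.0.12 and therefore become a single asset carrying both software packages; the third stays separate.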

Asset criticality is also a key element in measuring vulnerability risk, accounting for nearly 50% of the weight in a prioritization approach. Without an accurate inventory of assets and an assessment of their criticality in the company’s business environment, it is impossible to accurately compute the real risk associated with each vulnerability. Some solutions, such as Hackuity, compensate for missing or incomplete risk analyses by automatically assessing the criticality of assets based on their technical and operational properties (types and families of tools installed, density of interconnections, hosted databases, etc.).
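A minimal sketch of such an automatic assessment, using the three kinds of properties listed above. Every weight and cap here is hypothetical and purely illustrative; it is not Hackuity’s model, only a demonstration that technical properties alone can rank assets when no business impact analysis exists.

```python
# Hypothetical weights per software family: illustrative, not a published model.
FAMILY_WEIGHT = {
    "database_engine": 0.4,
    "directory_service": 0.4,
    "web_server": 0.2,
    "office_suite": 0.1,
}


def estimate_criticality(families, interconnections, hosted_databases):
    """Estimate asset criticality in [0, 1] from technical properties only."""
    score = max((FAMILY_WEIGHT.get(f, 0.0) for f in families), default=0.0)
    score += 0.3 * min(interconnections / 50, 1.0)  # density of interconnections
    score += 0.1 * min(hosted_databases, 3)         # hosted databases, capped at 3
    return round(min(score, 1.0), 2)


print(estimate_criticality({"database_engine"}, interconnections=40, hosted_databases=2))
print(estimate_criticality({"office_suite"}, interconnections=2, hosted_databases=0))
```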

As a result, obtaining consolidated information about vulnerabilities or the company’s assets no longer requires mastering dozens of tools or formats, which significantly reduces the cost and workload of managing disparate tools.

The missing link between detection and remediation of vulnerabilities

Finally, a bidirectional link with the teams in charge of remediation or security monitoring enables a collaborative approach to managing the stock of vulnerabilities.

Indeed, while automation has become a key lever for vulnerability management, the human factor remains at the heart of the process.

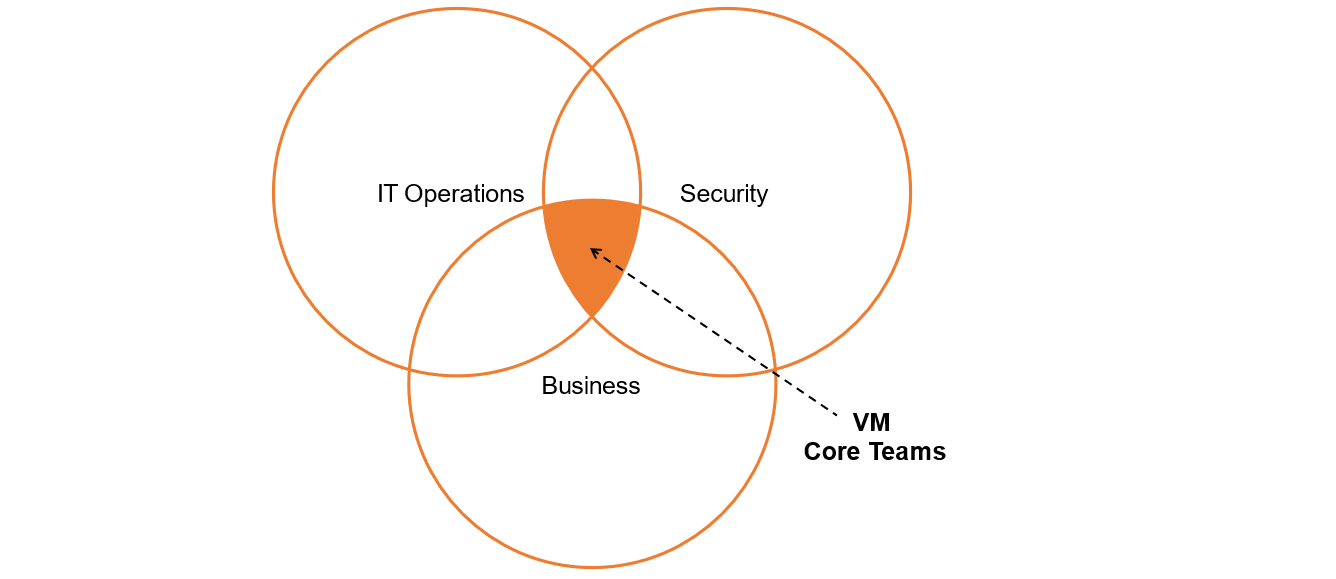

In most companies, Vulnerability Management involves 3 actors who must work together:

- The security teams in charge of operating the detection tools and managing remediation plans,

- The business managers who arbitrate or clarify the remediation plans in the light of business constraints,

- Operational staff in charge of deploying corrective measures (patch management, configuration, development, etc.).

The efficiency of the process therefore does not stop at automating vulnerability collection. In the downstream part of the process (remediation management), playbooks can be used to mobilize the resources needed to implement corrective measures: identifying the person in charge of the task, automatically creating incident tickets, generating scripts for Infrastructure as Code solutions, etc.
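A single playbook step might look like the sketch below: pick the owning team from a routing table, then build a ticket payload. The routing table, field names and sample vulnerability are hypothetical; sending the payload to an actual ticketing system depends on that system’s API and is left out.

```python
import json

# Hypothetical routing table: asset tag -> team in charge of remediation.
OWNERS = {"linux": "unix-ops", "windows": "windows-ops", "webapp": "dev-payments"}


def build_ticket(vuln, default_owner="security-team"):
    """Playbook step: route a prioritized vulnerability to its owner as a ticket payload."""
    owner = OWNERS.get(vuln["asset_tag"], default_owner)  # fall back to the security team
    return {
        "assignee": owner,
        "title": f"[{vuln['priority']}] {vuln['title']} on {vuln['asset']}",
        "description": vuln["remediation"],
        "labels": ["vulnerability", vuln["priority"].lower()],
    }


ticket = build_ticket({
    "asset": "srv-db-01",
    "asset_tag": "linux",
    "priority": "P1",
    "title": "Outdated OpenSSH package",
    "remediation": "Upgrade OpenSSH to the vendor-supported version.",
})
print(json.dumps(ticket, indent=2))
```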

Upstream, the CISO finally has, often for the first time, a real-time view of the progress of remediation plans.
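The kind of indicators such a view relies on can be sketched from detection and fix dates alone. The record layout and the three indicators chosen (closure rate, mean time to fix, age of the oldest open vulnerability) are illustrative assumptions, not a standard set.

```python
from datetime import date
from statistics import mean


def remediation_kpis(vulns, today):
    """Compute simple progress indicators from detection and fix dates."""
    closed = [v for v in vulns if v.get("fixed_on") is not None]
    still_open = [v for v in vulns if v.get("fixed_on") is None]
    fix_delays = [(v["fixed_on"] - v["detected_on"]).days for v in closed]
    return {
        "closure_rate": len(closed) / len(vulns) if vulns else 0.0,
        "mean_days_to_fix": mean(fix_delays) if fix_delays else None,
        "oldest_open_days": max(((today - v["detected_on"]).days for v in still_open),
                                default=None),
    }


# Hypothetical remediation history.
history = [
    {"detected_on": date(2020, 1, 1), "fixed_on": date(2020, 1, 11)},
    {"detected_on": date(2020, 1, 1), "fixed_on": date(2020, 1, 21)},
    {"detected_on": date(2020, 1, 5), "fixed_on": None},
]
print(remediation_kpis(history, today=date(2020, 2, 4)))
```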

The vulnerability management solution then becomes the orchestrator of the ecosystem of solutions aimed at detecting, qualifying, correcting and monitoring the vulnerabilities affecting the company.

Designed as an open system, it also allows third party tools and processes (SIEM, GRC, Compliance, Forensics, …) to be fed with consolidated and structured data on vulnerabilities, assets and threats affecting the business.

Conclusion

As a true cornerstone of corporate cybersecurity, vulnerability management can finally become a scalable, effective practice, with factual indicators reflecting the efforts of both the security teams and the teams in charge of remediation.

Besides the direct impact on the company’s security posture, through a shorter vulnerability exploitation window or the assignment of experts to high value-added tasks, integrating a vulnerability management orchestration solution can also bring indirect benefits, such as a better understanding of the information system, or even a tenfold increase in team commitment thanks to the quantification of the impact of their actions on the company’s security.