Containers represent an opportunity for rapid, flexible, and efficient application deployment.

In 2019, 84% of production infrastructures were already using containers[1]. As is often the case, this massive adoption took place without involving cybersecurity teams, sometimes out of unfamiliarity with the technology, and sometimes to keep things simple and efficient for development teams.

The need to secure containers is greater than ever, and it’s time for cybersecurity teams to understand the technology and define the right security measures.

We’ll start with a comparison between containers and virtual machines, then look back at the reasons for the emergence of containers. We’ll then look at how to secure them throughout their lifecycle, step by step.

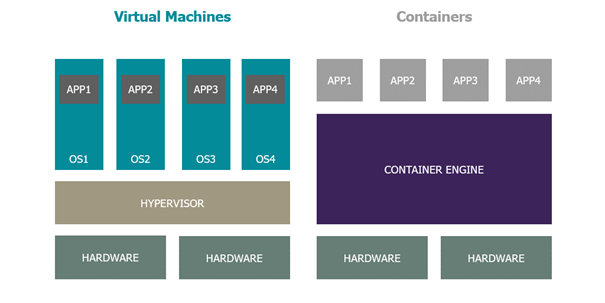

Virtual machine, container: what’s the difference?

But why choose a container? To understand this, we first need to look at the difference between a virtual machine and a container.

The main difference between a VM (Virtual Machine) and a container lies in what is included in the virtualized space. A container includes only the application and the dependencies required to run it, whereas a VM contains a full operating system on which one or more applications are installed. As a container has no operating system kernel of its own, it relies on the kernel of the host on which it runs. This makes containers much lighter than VMs, but it also means that all the containers on a host share that host’s kernel.

So why use containers at all?

Containers were not developed to enhance security, but rather for infrastructure purposes. The main advantages are:

– Consistency: containers can be launched on any machine and will operate in the same way.

– Economy: containers start faster and require fewer resources than VMs, so they cost less.

– Automation: it’s much easier to automate the deployment of a container than the creation of a virtual machine (Cloud technologies have come a long way in this respect).

These three advantages, combined with the spread of the DevOps approach within companies, have led to an explosion in the use of containers. Although security was not ignored, it was not a design objective for containers. As a result, good security practices were put in place gradually, as the technology was developed and adopted.

Execution models

The advantages of containers stem from the specific way in which they are run. Let’s take a look at container execution models.

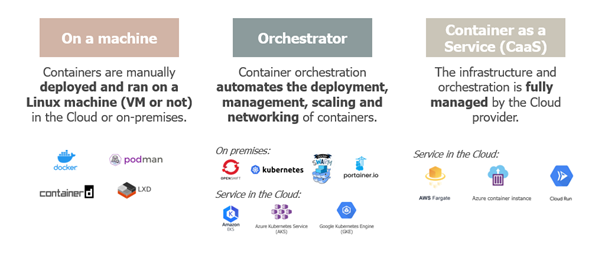

A container can be run on an on-premises or cloud-hosted machine. As explained above, a container contains only an application and its dependencies. It has no operating system kernel of its own, and therefore relies on the host’s. Consequently, a container that requires Linux functionality needs to run on a machine with a Linux operating system, and a container that requires Windows functionality needs to run on a Windows machine. However, virtualization technologies, such as Hyper-V on Windows, make it possible to overcome this constraint.

To run a container on a machine, you simply need to install container management software (a container runtime). Docker, LXD and containerd are among the most widely used container platforms.

This makes it easy to run a single container on a machine. However, companies often have a large number of applications. The problem then arises of managing and scaling the containers to be deployed.

This is where container orchestrators come in. An orchestrator makes it easy to manage the deployment, monitoring, lifecycle, scaling and networking of containers. Orchestrators can be installed on on-premises machines or consumed as services offered by Cloud providers. In the latter case, they are easy to set up and configure, as the Cloud provider manages them. The most widely used orchestrator in companies is Kubernetes, and a number of products, such as OpenShift, are built on it. Other alternatives, such as Docker Swarm, also provide orchestration.

In some cases, containers need to be managed and scaled without managing the underlying infrastructure. For this purpose, Cloud providers offer services that run containers in a managed way: all the user has to do is specify a few configuration settings. This type of service is called CaaS (Containers as a Service).

The following infographic summarizes the execution models and the names of the technologies or services:

This wide variety of deployment modes means that containers can be adapted to suit business needs. It’s important to remember that the security of a container at runtime also depends on the security of the infrastructure it runs on.

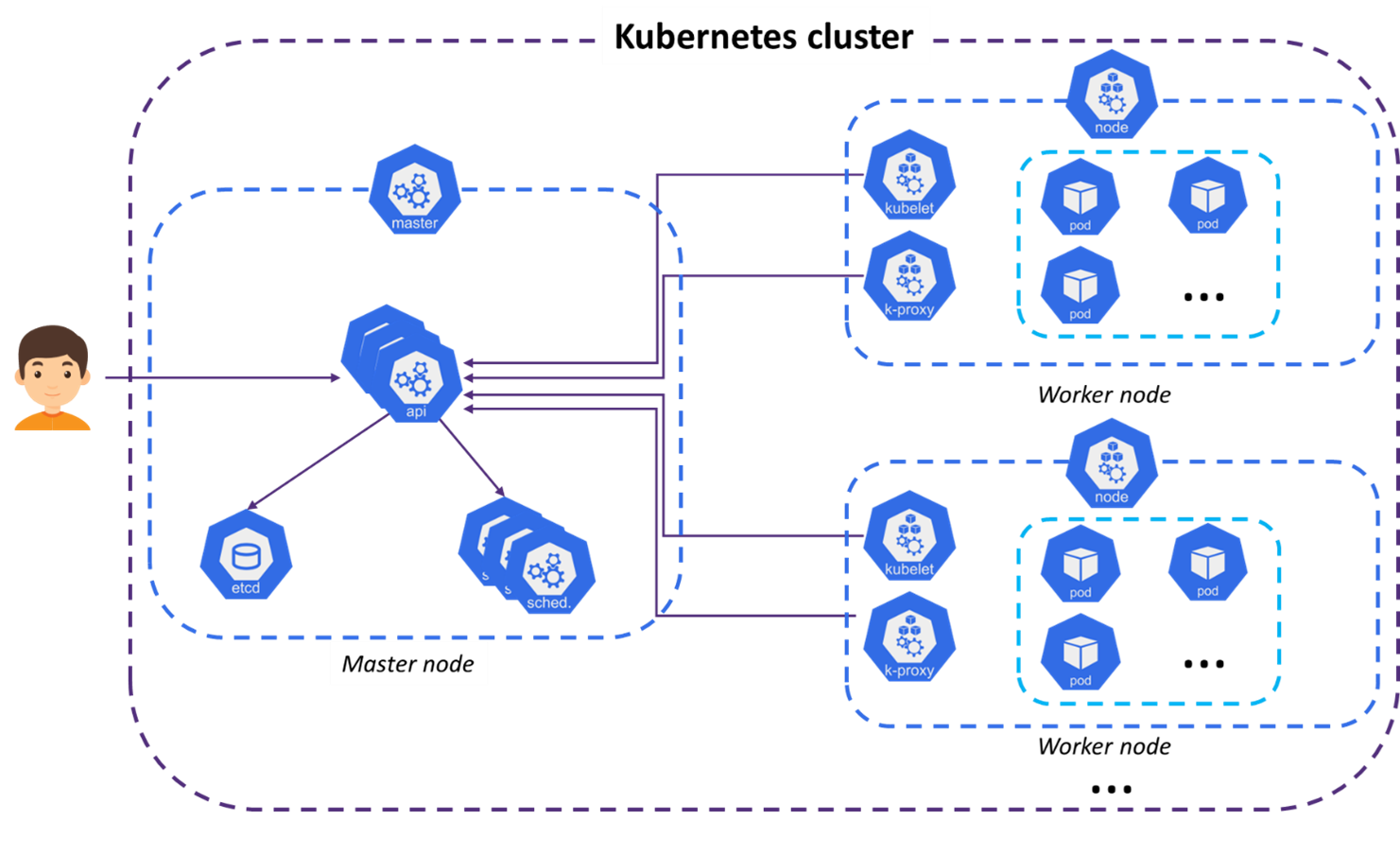

Focus on the Kubernetes orchestrator

As mentioned above, Kubernetes and the products built on it are the most widespread orchestration technologies, so we will use Kubernetes to illustrate how an orchestrator works. To keep things simple, let’s use the analogy of a container port.

Let’s start with the worker nodes. These will be our container ships. Their role is to carry the load, i.e., to execute the orchestrator’s containers.

Kubernetes then introduces the concept of pods. Pods are the containers loaded onto the ships. A pod is generally made up of a single container, and it is this component that runs the application to be deployed.

Next, we have the control plane, made up of master nodes. These are represented by the cranes that dispatch the containers from one boat to another, according to the load each boat can accommodate. In Kubernetes technical terms, the master node will decide on which worker node(s) to execute pods. The master node is the cluster’s central point. It contains all the cluster’s intelligence. It’s also with this node that we interact to administer the cluster, and it’s with this node that the worker nodes interact to know what actions to perform according to the pods they’re executing (create new ones, destroy them…).
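
To make the scheduling decision more concrete, here is a deliberately simplified sketch in Python. The real kube-scheduler first filters out the nodes that cannot run a pod and then scores the remaining ones through many plugins; this sketch assumes a single resource (CPU, in millicores) and a single scoring rule (the node with the most free capacity wins). The `Node` class, the `schedule` function and the node names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A hypothetical worker node, with CPU expressed in millicores."""
    name: str
    capacity_m: int
    allocated_m: int = 0

    @property
    def free_m(self) -> int:
        return self.capacity_m - self.allocated_m


def schedule(request_m: int, nodes: list[Node]) -> Optional[str]:
    """Choose a worker node for a pod requesting `request_m` millicores of CPU."""
    # Filtering: keep only the nodes that still have room for the pod.
    feasible = [n for n in nodes if n.free_m >= request_m]
    if not feasible:
        return None  # no node fits: the pod stays Pending
    # Scoring: prefer the node with the most free CPU, which spreads the load.
    best = max(feasible, key=lambda n: n.free_m)
    best.allocated_m += request_m
    return best.name


nodes = [Node("worker-1", 2000, 1500), Node("worker-2", 4000, 1000), Node("worker-3", 1000)]
print(schedule(800, nodes))   # worker-2: it has 3000m free, the most of the three
print(schedule(2500, nodes))  # None: no node has 2500m free any more
```

In the port analogy, this is the crane checking which ship still has room before loading a new container onto it.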

Finally, there’s a load balancer, represented in this analogy by the trucks carrying the containers. The load balancer distributes incoming traffic between pods. For example, if three pods are hosting the same application, the load balancer will distribute requests between them, so as not to overload any one of them. The load balancer is the interface between the cluster and the outside world, just as trucks are the link between the port and the outside.
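
Round robin is one of the simplest ways to spread requests evenly, and it illustrates the idea well. The Python sketch below assumes three hypothetical pod names; real load balancers, including Kubernetes Services and cloud load balancers, may use other selection algorithms.

```python
import itertools
from collections import Counter


def round_robin(pods: list[str], requests: list[str]) -> list[tuple[str, str]]:
    """Send each incoming request to the next pod in turn."""
    rotation = itertools.cycle(pods)
    return [(request, next(rotation)) for request in requests]


pods = ["app-pod-1", "app-pod-2", "app-pod-3"]
assignments = round_robin(pods, [f"request-{i}" for i in range(9)])
load = Counter(pod for _, pod in assignments)
print(dict(load))  # {'app-pod-1': 3, 'app-pod-2': 3, 'app-pod-3': 3}
```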

Here is a more traditional technical diagram showing the various components:

The Kubernetes documentation describes the full set of components.[2]

How can we secure containers at every stage of their lifecycle?

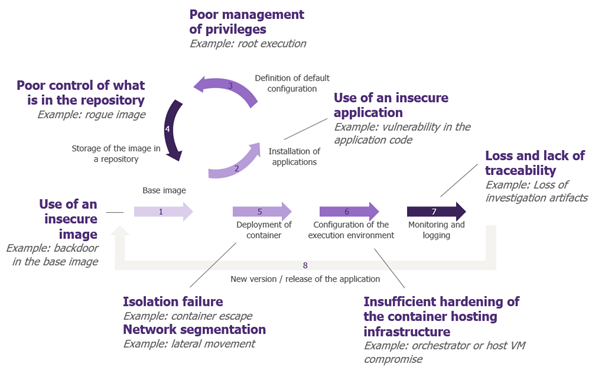

Now that we’ve covered the basics, let’s take a look at how to secure it all. Security must be applied to every stage of a container’s lifecycle. Indeed, each stage presents its own challenges and associated security impacts.

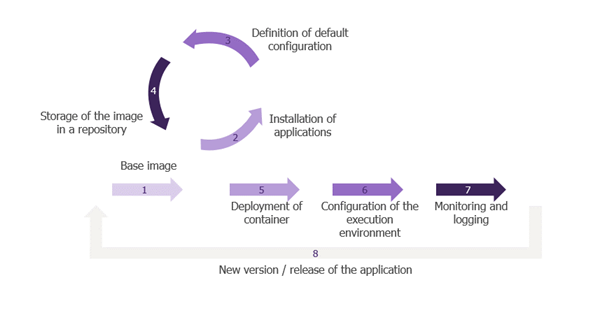

The image is first built

The first step in the container lifecycle is to choose a base image. A container image is a lightweight package of software and files that includes everything needed to run an application: code, runtime, system tools, system libraries and settings. In most cases, the base image is retrieved from the Internet, so there is a risk of using an image from an unknown source that has already been compromised (with a backdoor, for example).

So, in this first stage, it’s vital to choose the source of your image carefully, to ensure that you start from a “trusted image”. This can be achieved by using reference sources, such as the Docker Official Images on Docker Hub, or by creating your own image catalogue. In the latter case, the images are verified and validated upstream by the company’s security teams and are known as “golden images”.
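
A trusted-source policy can be enforced with a simple allowlist check on image references before they are used. The Python sketch below is a minimal illustration: the registry hostname `registry.example.internal` and the approved prefixes are hypothetical, and the normalization only covers Docker’s common short-name rules (a bare name such as `nginx` resolves to `docker.io/library/nginx`).

```python
# Hypothetical approved sources: an internal "golden image" registry and the
# Docker Official Images namespace on Docker Hub. Adapt to your own catalogue.
TRUSTED_PREFIXES = (
    "registry.example.internal/golden/",
    "docker.io/library/",
)


def normalize(image: str) -> str:
    """Expand a short image reference into registry/namespace/name form."""
    first, sep, _ = image.partition("/")
    # The first component is treated as a registry host if it contains
    # a dot or a port, or is "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        return image
    if not sep:
        return "docker.io/library/" + image  # e.g. "nginx:1.27"
    return "docker.io/" + image  # e.g. "someuser/nginx"


def is_trusted(image: str) -> bool:
    """Accept only images whose normalized reference starts with an approved prefix."""
    return normalize(image).startswith(TRUSTED_PREFIXES)


for ref in ("nginx:1.27", "someuser/nginx:latest", "registry.example.internal/golden/python:3.12"):
    print(ref, "->", "trusted" if is_trusted(ref) else "REJECTED")
```

A stricter check would typically also require images to be pinned by digest rather than by a mutable tag.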

The second step is to install an application on the image. There is therefore a classic risk of a vulnerability in the application code. Vulnerability scans, developer awareness and adherence to good development practices are essential here to prevent a vulnerability from creeping into the application code.

The third step is image configuration: the image defines default settings that apply when containers are started from it. For example, unless the image specifies another user, a container runs with the root (system administrator) account: leaving this default unchanged is a risk should the container be compromised. Setting the container’s file system to read-only also limits the impact of a compromise. With these two settings, an attacker has far less room to act.
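
The root-by-default risk can be caught before the image is even built, by checking whether the Dockerfile switches to a non-root user. The Python sketch below is a simplified linter, not a full Dockerfile parser: it ignores line continuations and build arguments, and it assumes that every base image runs as root unless a later `USER` instruction says otherwise. The read-only file system, by contrast, is set when the container is started (for example with `docker run --read-only`, or `readOnlyRootFilesystem` in Kubernetes).

```python
from typing import Optional


def final_user(dockerfile: str) -> Optional[str]:
    """Return the user set by the last USER instruction of the final stage, if any."""
    user = None
    for raw_line in dockerfile.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        argument = parts[1].strip() if len(parts) > 1 else ""
        if instruction == "FROM":
            user = None  # a new stage starts over (assumption: base image runs as root)
        elif instruction == "USER" and argument:
            user = argument.split(":", 1)[0]  # drop an optional ":group" part
    return user


def runs_as_root(dockerfile: str) -> bool:
    """Treat a missing USER, "root" or UID 0 as running as root."""
    return final_user(dockerfile) in (None, "root", "0")


example = """\
FROM python:3.12-slim
RUN useradd --uid 10001 appuser
COPY app.py /app/
USER appuser
CMD ["python", "/app/app.py"]
"""
print(runs_as_root(example))  # False: the final stage switches to appuser
```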

The image is then stored in a container repository

Once the image has been built, it needs to be stored so that it can be accessed and deployed as many times as required. To do this, we use a container repository, which also needs to be secured. Indeed, if an attacker pushes a corrupted image into the container repository, it can be deployed in production.

Several security measures can be implemented to secure the container repository:

- Restrict user or resource rights and permissions on the repository to reduce risk: only people or resources who need to “push” or “pull” an image from the repository should be entitled to do so.

- Restrict the repository’s network exposure.

- Scan images when they are pushed, before they are made available. This limits the presence of compromised images in the container repository.

- Sign pushed images to ensure their integrity.

- Keep a record of actions carried out on the container repository.
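
Image integrity relies on content addressing: registries that follow the OCI model identify each manifest and layer by a `sha256:` digest of its bytes, and signing tools such as Sigstore cosign or Notation sign that digest with a private key. The Python sketch below shows only the verification half of the idea, comparing the digest of pulled bytes with the one recorded at push time; the manifest content is a made-up placeholder, and real signature checking requires one of those tools.

```python
import hashlib


def content_digest(blob: bytes) -> str:
    """Compute an OCI-style digest: 'sha256:' followed by the hex SHA-256."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_pulled(blob: bytes, expected_digest: str) -> bool:
    """True only if the pulled bytes are exactly those recorded at push time."""
    return content_digest(blob) == expected_digest


manifest = b'{"schemaVersion": 2, "layers": []}'  # placeholder manifest bytes
recorded = content_digest(manifest)                # stored when the image is pushed
tampered = manifest.replace(b"[]", b'[{"digest": "sha256:evil"}]')

print(verify_pulled(manifest, recorded))  # True
print(verify_pulled(tampered, recorded))  # False: modified bytes give a different digest
```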

This is followed by the image deployment phase

Once the image has been built and stored, it needs to be deployed to make it accessible.

When a container is deployed, its runtime settings are chosen according to the use case. Some settings reduce the logical isolation between containers and the host. For example, you can allow a container to see the host’s processes or to share the host’s network stack. Privileged mode goes even further, removing these isolation barriers almost entirely and giving the container access to all of the host’s functions. These configurations, some of which are dangerous, can lead to container escapes, i.e., an attacker who has compromised a container uses these privileges to escape to the host operating system. Once on the host, an attacker can read information from host files or move laterally. In other words, it’s one step further into the information system.

In terms of deployment recommendations, the first step is to restrict container repositories to a known and trusted list. Next, mechanisms such as AppArmor and seccomp profiles, or dropping Linux capabilities, can be used to restrict the system calls and resources available to containers. Finally, the container file system should be configured as read-only, and the principle of least privilege applied to the configuration passed to containers. In other words, avoid privileged mode and avoid breaking isolation (process, network, etc.) unless it is strictly necessary.
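
In Kubernetes, these recommendations map onto fields of the pod specification. The Python sketch below reviews a pod spec, expressed as a dictionary, for the settings discussed above. The field names (`hostPID`, `hostNetwork`, `privileged`, `runAsNonRoot`, `readOnlyRootFilesystem`, `capabilities`, and so on) are real Kubernetes fields, but the `risky_settings` function is a hypothetical illustration: it only checks the container-level `securityContext` (some of these settings can also be set at pod level) and is no substitute for an admission controller.

```python
def risky_settings(pod_spec: dict) -> list[str]:
    """List settings in a pod spec that weaken isolation or ignore least privilege."""
    findings = []
    # Pod-level flags that make the pod share a namespace with the host.
    for flag in ("hostPID", "hostIPC", "hostNetwork"):
        if pod_spec.get(flag, False):
            findings.append(f"pod: {flag} shares a host namespace")
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        if ctx.get("privileged", False):
            findings.append(f"{name}: privileged mode")
        if not ctx.get("runAsNonRoot", False):
            findings.append(f"{name}: runAsNonRoot not enforced")
        if not ctx.get("readOnlyRootFilesystem", False):
            findings.append(f"{name}: writable root file system")
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: privilege escalation not disabled")
        if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
            findings.append(f"{name}: Linux capabilities not dropped")
    return findings


pod_spec = {
    "hostNetwork": True,
    "containers": [{"name": "web", "image": "nginx:1.27",
                    "securityContext": {"privileged": True}}],
}
for finding in risky_settings(pod_spec):
    print(finding)
```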

Finally, the container is executed

When it comes to execution, we’re going to focus on the methods favoured by enterprises: orchestrators, most often Kubernetes, and cloud container hosting services, known as CaaS.

In the case of Kubernetes orchestration, the first objective is to verify that container deployments are compliant, in order to prevent dangerous privileged containers from being deployed. Such deployments may be the result of an attack or simply of administrative errors. Depending on the platform, this may involve Pod Security Admission (Kubernetes), Security Context Constraints (OpenShift) or external tools such as OPA Gatekeeper.
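
With Pod Security Admission, the option built into Kubernetes, enforcement is configured per namespace through labels that select one of three standard profiles: `privileged`, `baseline` or `restricted`. The Python sketch below generates such a Namespace manifest as JSON, which `kubectl apply -f -` accepts on standard input. The namespace name `payments` is hypothetical, and setting the same profile for the `enforce`, `audit` and `warn` modes is one possible choice, not the only one.

```python
import json

PROFILES = ("privileged", "baseline", "restricted")


def namespace_with_pod_security(name: str, profile: str = "restricted") -> dict:
    """Build a Namespace manifest that applies a Pod Security Standards profile."""
    if profile not in PROFILES:
        raise ValueError(f"unknown profile {profile!r}, expected one of {PROFILES}")
    # enforce: reject non-compliant pods; audit: record them in the audit log;
    # warn: return a warning to the user who submitted them.
    labels = {f"pod-security.kubernetes.io/{mode}": profile
              for mode in ("enforce", "audit", "warn")}
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


print(json.dumps(namespace_with_pod_security("payments"), indent=2))
```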

It is also recommended to restrict network flows within the cluster (between containers) and out of the cluster, in order to limit lateral movement. This can be done with Kubernetes NetworkPolicy resources, which are only enforced if the cluster’s network plugin supports them, or with external micro-segmentation tools. Finally, fine-grained role and user management is needed, and the virtual machines serving as nodes must be sufficiently hardened.
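
A common pattern is to deny all traffic by default in a namespace, then open only the flows each application needs. The Python sketch below builds both NetworkPolicy manifests as dictionaries and prints them as a JSON list that `kubectl apply` can consume; the namespace `shop`, the `app` labels and port 8080 are hypothetical.

```python
import json


def default_deny(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress or egress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},                     # empty selector: all pods
            "policyTypes": ["Ingress", "Egress"],  # no rules listed: nothing allowed
        },
    }


def allow_ingress(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow pods labelled app=source_app to reach app=target_app on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


policies = [default_deny("shop"), allow_ingress("shop", "api", "frontend", 8080)]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Because the default policy also blocks egress, the `frontend` pods would need a matching egress rule (and usually DNS access) before this flow actually works.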

In the case of CaaS, the infrastructure is managed by the cloud provider. As a user, hardening can only be achieved by enabling or disabling certain options. An analysis of each solution will be necessary to define precise recommendations, as Azure, Google Cloud Platform and Amazon Web Services all offer different options.

Lastly, monitor all stages

Container monitoring is important for debugging purposes and for recovering evidence in the event of an incident. Unfortunately, unlike a virtual machine, a container is ephemeral, and so are its logs. So how do you go about it?

Monitoring can be carried out at three levels:

- At container level, by externalizing logs (to counter the ephemeral nature of containers and their logs)

- At container workload level

- At infrastructure level (cluster nodes, for example)
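
At container level, the usual approach is for the application to write its logs to standard output, where the container runtime captures them (this is what `docker logs` and `kubectl logs` read), so that a node-level agent can forward them to external storage before the container disappears. Writing one JSON object per line makes these logs easier to parse downstream. The Python sketch below uses only the standard `logging` module; the field names and the logger name `payments-api` are assumptions to adapt to your own log schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def make_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout, where the runtime collects them."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]  # replace existing handlers to avoid duplicate lines
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


log = make_logger("payments-api")
log.warning("failed login for user %s", "alice")
```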

The collected logs can be handled by dedicated cloud SOC teams or centralized in the company’s SIEM. Detection scenarios can then be created to detect IAM modifications, abnormal resource consumption and so on.
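
As an illustration of an “abnormal resource consumption” scenario, for instance a cryptominer started inside a compromised container, the Python sketch below flags measurements that exceed the mean of a baseline window by more than k standard deviations. This is a deliberately simple statistical rule rather than a production detection; the CPU figures are made up, and the threshold k = 3 is an assumption to tune per workload.

```python
from statistics import mean, pstdev


def spikes(baseline: list[float], current: list[float], k: float = 3.0) -> list[int]:
    """Return the indexes of `current` samples above mean + k * stdev of `baseline`."""
    threshold = mean(baseline) + k * pstdev(baseline)
    return [i for i, value in enumerate(current) if value > threshold]


# Hypothetical CPU usage of one container, in millicores, sampled every minute.
baseline = [100, 110, 90, 105, 95]
current = [102, 98, 950, 990]
print(spikes(baseline, current))  # [2, 3]
```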

It’s worth mentioning that CaaS solutions and Kubernetes managed by a Cloud provider (AKS, EKS, GKE, …) make it easy to centralize and externalize these logs.

This section covered the best practices to be followed and the risks associated with each stage in a container’s life cycle. The diagram below provides a summary:

CWPP, the solution to our problems?

CWPP (Cloud Workload Protection Platform) is a category of tool we’re hearing a lot about at the moment. But what does it do?

A CWPP is a tool for monitoring and detecting threats to workloads, i.e., all services running in the cloud, and in particular containers. It helps to ensure security throughout the lifecycle described above. It is particularly useful for detecting secrets and vulnerabilities in application libraries, reviewing repository access, checking configurations, and managing monitoring (log collection, detection, and remediation).
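
Secret detection, one of the features mentioned above, largely comes down to pattern matching over source code, Dockerfiles and image layers. The Python sketch below shows the principle with two illustrative rules: AWS access key IDs, which start with `AKIA` (or `ASIA` for temporary credentials) followed by 16 upper-case letters or digits, and PEM private key headers. Real tools ship far larger rule sets and add checks such as entropy analysis; the sample Dockerfile uses AWS’s documented example key, not a real credential.

```python
import re

# Two illustrative rules; real scanners maintain many more.
SECRET_RULES = {
    "aws-access-key-id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private-key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every line matching a secret rule."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_RULES.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits


dockerfile = """\
FROM alpine:3.20
ENV AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
COPY app /usr/local/bin/app
"""
print(find_secrets(dockerfile))  # [(2, 'aws-access-key-id')]
```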

Like all tools, CWPP is not magic. It needs to be deployed with or without an agent, depending on the scenarios you wish to cover. But beyond the technical aspect of deployment, it must be integrated into the company’s processes, so that all stakeholders have a tool that helps them improve security. We must therefore not underestimate the work involved in defining the strategy, new processes and change management, as well as in integrating the tool with the tools used by developers. For example, a developer will want to be notified in their ticketing tool (Jira, an issue in the project’s Git repository…) that they need to remediate a container, and to be able to test their new container on their own machine before even pushing it to the container repository.

The functionalities of a CWPP are often already partially or fully covered by existing tools; deploying one can help centralize visibility and sometimes reduce licensing costs.

Key elements of container security

As you can see from this article, containers were born out of infrastructure needs. Their lightness and flexibility make them a perfect fit for today’s application needs. However, adopting containers means that new attack surfaces need to be protected, and that container security needs to be taken into account.

Unfortunately, there is no single tool or best practice to follow. As the article illustrates, it’s a combination of measures that makes it possible to secure these application boxes. Among the best practices to observe, the following five points are the key elements to remember:

- Control images: by using a hardened trusted image, securing source code, and performing vulnerability scans.

- Secure container isolation: by avoiding dangerous configurations when deploying containers and by hardening images.

- Ensure network segmentation: by restricting the cluster’s external exposure, flows within the cluster and out of the cluster.

- Monitoring and detection: by retrieving logs at three different levels and setting up detection scenarios.

- Secure IAM access: by applying fine-grained IAM management on the cluster or on the Cloud provider. This management can be accompanied by periodic reviews.

[1] https://www.lemondeinformatique.fr/actualites/lire-l-usage-des-containers-en-production-bondit-a-84-78347.html

[2] https://kubernetes.io/docs/concepts/overview/components/