While AI has proven highly effective at increasing productivity in business environments, the next step in its evolution involves enhancing its autonomy and enabling it to perform actions independently. One notable development is the growing use of agentic AI, which Gartner named the top strategic technology trend for 2025. Whereas traditional AI typically follows rules and algorithms with minimal autonomy, AI agents plan their own actions based on their understanding of the environment, in order to achieve a set of objectives within their scope of action. The boom in AI agents is a direct result of integrating large language models (LLMs) into their core systems, which lets them process complex inputs and expands their capacity for autonomous decision-making.

The projected impact of agentic AI is significant. By 2028, it could automate 15% of routine decision-making[1] and be embedded in a third of enterprise applications, up from virtually none today. At the same time, perceptions of risk are shifting. In early 2024, Gartner surveyed 345 senior risk executives and identified malicious AI-driven activity and misinformation as the top two emerging threats[3]. Yet despite these concerns, organisations are accelerating adoption. By 2029, agentic AI could autonomously resolve up to 80% of common customer service issues, reducing costs by as much as 30%[2]. This tension, between the growing promise of agentic AI and the expanding risk surface it introduces, raises a critical question:

“How can organisations securely deploy agentic AI at scale, balancing innovation with accountability, and automation with control?”

This article explores that question, outlining key risks, security principles, and practical guidance to help CISOs and technology leaders navigate the next wave of AI adoption.

An AI agent is an autonomous AI system in the decision-making process

In AI systems, agents are designed to process external stimuli and respond through specific actions. The capabilities of these agents can vary significantly, especially depending on whether they are powered by LLMs.

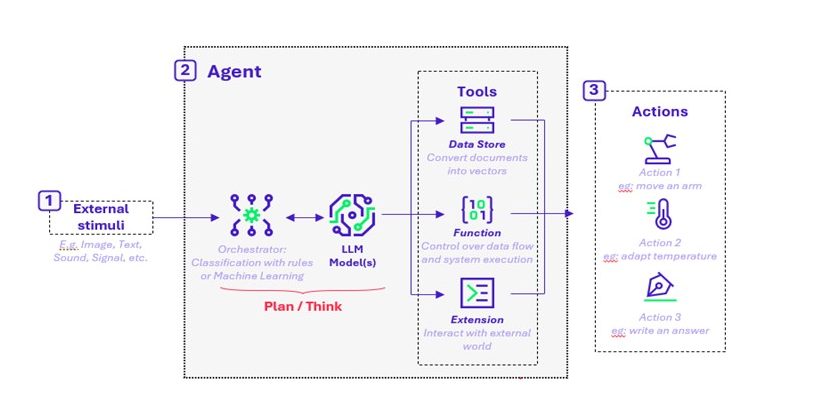

Figure 1: The constituent parts of an LLM-enabled agent: 1) external stimuli, 2) the agent’s core processes (reasoning and tools) and 3) the agent’s actions

Traditional agents typically follow a rule-based or pre-programmed workflow: they receive input, classify it, and execute a predefined action. In contrast, agentic AI introduces a new dimension by incorporating LLMs to perform reasoning and decision-making between perception and action, and all of this can be configured with only a few words. The result is more flexible, context-aware responses that, in many cases, allow AI agents to behave more like human intermediaries.

As illustrated in Figure 1, the agentic AI workflow unfolds in several stages:

- Perception: The AI agent receives external stimuli, such as text, images, or sound.

- Reasoning: These inputs are processed through an orchestration layer, which transforms them into structured formats using classification rules and machine learning techniques.

Here, the LLM plays a central role. It adds a layer of adaptive thinking that enables the agent to analyse context, select tools, query external data sources, and plan multi-step actions.

- Action: With refined data and a reasoning layer applied, the agent executes complex tasks, often with greater autonomy than traditional systems.

This architecture gives agentic AI the ability to operate across dynamic environments, adapt in real time, and coordinate with other agents or systems, a key differentiator from earlier, more static automation.

In summary, AI agents with LLM capabilities can perform more complex actions by applying “AI reasoning” to transformed and refined data, making them more powerful and versatile than traditional agents.
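The three stages above can be sketched in a few lines of Python. This is a minimal illustration only: `plan` is a hypothetical rule-based stand-in for the LLM reasoning layer, and the tool names in `TOOLS` are invented for the example rather than taken from any real product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str       # name of the tool the agent decided to call
    argument: str   # input passed to that tool


def plan(stimulus: str) -> List[Step]:
    """Hypothetical stand-in for the LLM: turns a request into a multi-step plan."""
    steps: List[Step] = []
    if "policy" in stimulus.lower():
        steps.append(Step("search_documents", "policy"))
    steps.append(Step("reply", stimulus))
    return steps


# Tools the agent is allowed to use (illustrative names only).
TOOLS: Dict[str, Callable[[str], str]] = {
    "search_documents": lambda query: f"found 1 document matching '{query}'",
    "reply": lambda message: f"answered: {message}",
}


def run_agent(stimulus: str) -> List[str]:
    perceived = stimulus.strip()                            # Perception
    steps = plan(perceived)                                 # Reasoning
    return [TOOLS[s.tool](s.argument) for s in steps]       # Action
```

In a real agent the `plan` function would be a model call, which is exactly where the flexibility, and the additional risk discussed later in this article, comes from.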

Field insights on Agentic AI use-cases in client environments

Businesses have rightly recognised the potential of AI agents across a variety of use cases, ranging from the simple to the complex. The following sections look at common use cases at each level of agent autonomy.

Basic Use Cases: Chatbot/Virtual Agents

AI agents can be configured to provide instant answers to complex questions and can be designed to only answer from certain information repositories. This allows them to smoothly and effectively guide users through extensive SharePoint libraries or other document repositories. Acting as both a search function and an assistant, these agents can dramatically improve the productivity of employees by reducing the time spent searching for information and ensuring that users have quick access to the data they need. For example, a chatbot integrated into SharePoint can help employees locate specific documents, understand company policies, or even assist with onboarding processes by providing relevant information and resources. These agents have no autonomy, and only directly respond to requests as they are made by users.

Intermediate Use Cases: Routine Task Automation

Agents can be used to streamline repetitive tasks such as managing scheduling, processing customer enquiries, and handling transactions. These agents can be designed to follow specified processes and workflows, offering significant advantages over humans by reducing human error and increasing productivity. For instance, an AI agent can automatically schedule meetings by coordinating with participants’ calendars, send reminders, and process routine customer service requests such as order tracking or account updates. This automation not only saves time but also ensures consistency and accuracy in task execution. Additionally, by handling routine tasks, AI agents free up human employees to focus on more complex and strategic activities, thereby contributing to higher efficiency and productivity within the organisation.

Advanced Use Cases: Complex data analysis & vulnerability management

Agents can also be used for more complex use cases, particularly in a security context. For example, Microsoft has announced AI agents as part of its Security Copilot offering, with previews released in April 2025. One particularly interesting use case is the vulnerability remediation agent. These agents will work within Microsoft Intune to monitor endpoints for vulnerabilities, assess these vulnerabilities for potential risks and impacts, and then produce a prioritised list of remediation actions. This provides a large increase in productivity for security teams, as they can then focus on the most critical issues and streamline the decision-making process. By automating the identification and prioritisation of vulnerabilities, these agents help ensure that security teams can address the most pressing threats promptly, reducing the risk of security breaches and improving overall security posture.

The promise of intelligent automation and cost efficiency is compelling, but it also introduces a strategic trade-off. CISOs will face the growing challenge of securing increasingly autonomous systems. Without robust guardrails, organisations expose themselves to operational disruption, governance failures, and reputational damage. Transparency, asset visibility, and cloud security are areas which will also require heightened vigilance and a proactive security posture. The benefits are clear, but so are the risks. Without a security-first approach, agentic AI could quickly become a liability for organisations as much as an asset.

Risks mainly known but with increased likelihood and impact

Agentic AI introduces a new level of security complexity. Unlike traditional AI systems, where threat surfaces are generally limited to inputs, model behaviour, outputs, and infrastructure, agentic AI systems operate across dynamic, autonomous chains of interaction. This covers exchanges such as agent-to-agent, agent-to-human, and human-to-agent, many of which are difficult to trace, monitor, or control in real time. As a result, the security perimeter expands beyond static models to encompass unpredictable behaviours and interactions.

Recent work by OWASP on agent security[4] highlights the breadth of threats facing AI systems today. These risks span multiple domains:

- Some are traditional cybersecurity risks (e.g., data extraction, and supply chain attacks),

- Others are general GenAI risks (e.g., hallucinations, model poisoning),

- A third, emerging category relates specifically to agents’ autonomy in carrying out actions in the real world.

In addition to traditional risks, agentic AI systems introduce new security threats, such as data exfiltration through agent-driven workflows, unauthorised or unintended code execution, and “agent hijacking,” where agents are manipulated to perform harmful or malicious actions. These risks are amplified by the way many agentic AI applications are built today. Around 90% of current AI agent use cases rely on low-code platforms, prized for their speed and flexibility. However, these platforms often depend heavily on third-party libraries and components, introducing significant supply chain vulnerabilities and further expanding the overall attack surface.

Agentic AI represents a shift from passive prediction to action-oriented intelligence, enabling more advanced automation and interactive workflows. As organisations deploy networks of interacting agents, the systems become more complex, and their exposure to security risks increases. With more interfaces and autonomous exchanges, it becomes essential to establish strong security foundations early. A critical first step is mapping agent activities to maintain transparency, support effective auditing, and enable meaningful oversight.

Security Best Practices

1. Activity Mapping & Security Audits

Since AI agents operate autonomously and interact with other systems, mapping all agent activities, processes, connections, and data flows is crucial. This visibility enables the detection of anomalies and ensures alignment with security policies.

Regular audits are vital for identifying vulnerabilities, ensuring compliance, and preventing shadow AI, where agents act without oversight. Unauthorised agents can expose systems to significant risks, and shadow AI, especially unsanctioned models, poses major data security threats. Auditing decision-making processes, data access, and agent interactions, along with maintaining an immutable audit trail, supports overall accountability and traceability.
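One common way to approximate an immutable audit trail is to chain entries together with hashes, so that any later modification becomes detectable. The sketch below assumes a simple in-memory list and invented field names (`agent_id`, `action`, `resource`); it is tamper-evident rather than truly immutable, and a production system would also need write-once storage.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash used before the first entry


def _digest(body: Dict) -> str:
    # Serialise with sorted keys so the same body always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], agent_id: str, action: str, resource: str) -> Dict:
    """Append an agent action, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"agent_id": agent_id, "action": action,
            "resource": resource, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict]) -> bool:
    """Return False if any entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

An auditor can run `verify_chain` periodically: a single edited entry invalidates its own hash and breaks the link to every entry after it.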

To mitigate these risks, organisations should adopt clear governance policies, comprehensive training, and effective detection strategies. These practices should be backed by a strong library of AI controls and data governance policies. However, audits and governance alone aren’t enough. Robust access controls for AI agents are necessary to restrict actions and protect the system’s integrity.

2. AI Filtering

To prevent the agent from performing inappropriate actions, the first step is to protect its decision-making system. One of the most efficient ways to do this is to filter potentially malicious inputs to, and outputs from, the decision-maker, which is often composed of an orchestrator and an LLM.

There are several technical ways to perform AI filtering:

Keyword filtering – Medium-Low Efficiency: Prevent the LLM from considering any input containing specified keywords and from generating any output containing these keywords.

- Pro: Quick win, particularly on the outputs, for example preventing a chatbot from generating any rude words.

- Con: Can easily be bypassed by using obfuscated inputs or requiring obfuscated outputs. For example, “p@ssword” or “p,a,s,s,w,o,r,d” can be ways to bypass the keyword “password”
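The bypass described above is easy to reproduce. The sketch below uses a hypothetical one-word blocklist and two illustrative functions: a naive substring filter, and a variant that undoes a few common character substitutions and strips separators before matching. The substitution table is an assumption chosen for the example and is far from exhaustive.

```python
import re

BLOCKED_KEYWORDS = {"password"}  # illustrative blocklist

# A few common look-alike substitutions (assumed; not a complete list).
SUBSTITUTIONS = str.maketrans({"@": "a", "0": "o", "1": "i", "3": "e", "$": "s"})


def naive_filter(text: str) -> bool:
    """Return True if a blocked keyword appears verbatim in the text."""
    lowered = text.lower()
    return any(keyword in lowered for keyword in BLOCKED_KEYWORDS)


def normalised_filter(text: str) -> bool:
    """Return True if a blocked keyword appears after simple de-obfuscation."""
    lowered = text.lower().translate(SUBSTITUTIONS)
    collapsed = re.sub(r"[^a-z]", "", lowered)  # drop commas, spaces, punctuation
    return any(keyword in collapsed for keyword in BLOCKED_KEYWORDS)
```

Normalisation catches both examples from the text, but it also removes word boundaries and so raises the false-positive rate, and an attacker can always move to an encoding the table does not cover. This is why keyword filtering is best treated as a quick first layer rather than a complete control.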

LLM-as-a-judge – High Efficiency: Ask an LLM to analyse both inputs and outputs and identify whether they are malicious.

- Pro: Extend the analysis to the whole answer.

- Con: Can be bypassed by flooding the agent with very long inputs, which the judge then struggles to analyse in full.

AI Classification – Very-High Efficiency: Define the categories of topics that the LLM may or may not answer. This can be done through whitelisting (the LLM can answer only certain categories of topics) or blacklisting (the LLM cannot answer certain specific categories of topics). A specialised AI system then analyses each input and output.

- Pro: Ensure the agent’s alignment by not letting it receive inputs on topics it should not be able to answer.

- Con: High cost, as it requires additional LLM analysis.

These filtering actions need to be performed on users’ inputs, and sometimes also on the data retrieved from external sources, which can itself be poisoned.
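As a rough illustration of whitelisting applied to both user inputs and retrieved data, the sketch below gates everything through a topic classifier before it reaches the decision-maker. `classify_topic` is a hypothetical keyword-based stand-in for the specialised AI classifier, and the topic names are invented for the example.

```python
from typing import List

ALLOWED_TOPICS = {"hr_policy", "it_support"}  # whitelist of permitted topics


def classify_topic(text: str) -> str:
    """Hypothetical stand-in for a specialised classification model."""
    lowered = text.lower()
    if "ignore previous instructions" in lowered:
        return "prompt_injection"
    if "leave" in lowered or "holiday" in lowered:
        return "hr_policy"
    if "laptop" in lowered or "vpn" in lowered:
        return "it_support"
    return "unknown"


def is_allowed(text: str) -> bool:
    return classify_topic(text) in ALLOWED_TOPICS


def build_context(user_input: str, retrieved_chunks: List[str]) -> List[str]:
    """Reject off-topic requests and drop retrieved chunks that fail the same check."""
    if not is_allowed(user_input):
        raise ValueError("input topic not permitted")
    return [chunk for chunk in retrieved_chunks if is_allowed(chunk)]
```

Applying the same gate to retrieved chunks means a poisoned document is discarded before the LLM ever sees it, at the cost of one extra classification per chunk.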

3. AI-specific Security Measures

Human-in-the-loop (HITL) oversight is essential for ensuring the responsible and secure operation of agentic AI. While AI agents can autonomously perform tasks, human review in high-risk or ethically sensitive situations provides an extra layer of judgment and accountability. This oversight helps prevent errors, biases, and unintended consequences, while allowing organisations to intervene when AI actions deviate from guidelines or ethical standards. HITL also fosters trust in AI systems and ensures alignment with business objectives and regulatory requirements. To maximise the benefits of automation, a hybrid AI-human approach is critical, supported by ongoing training to address compliance and inherent risks.

Some actions may be strictly forbidden to the agent, some should require human validation, and some could be done without human supervision. These actions should be determined through classical risk analysis, based on the agent’s impact & autonomy.

Triggers should be set up to determine if and when human validation is needed. These can be defined in the LLM master prompt, and access can be restricted by using an appropriate IAM model.
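The three tiers of action described above (forbidden, human-validated, unsupervised) can be expressed as a small policy table. The sketch below is an assumption about how such a gate might look: the action names are invented, the `approver` callback stands in for whatever human validation channel the organisation uses, and the check is enforced in code outside the model so that a manipulated prompt cannot skip it.

```python
from enum import Enum
from typing import Callable, Dict


class Decision(Enum):
    ALLOW = "allow"                        # no human supervision needed
    REQUIRE_APPROVAL = "require_approval"  # human validation required
    DENY = "deny"                          # strictly forbidden to the agent


# Illustrative policy, in practice derived from a risk analysis.
ACTION_POLICY: Dict[str, Decision] = {
    "read_document": Decision.ALLOW,
    "send_external_email": Decision.REQUIRE_APPROVAL,
    "delete_records": Decision.DENY,
}


def execute(action: str, approver: Callable[[str], bool]) -> str:
    """Apply the policy before acting; unlisted actions are denied by default."""
    decision = ACTION_POLICY.get(action, Decision.DENY)
    if decision is Decision.DENY:
        return "blocked"
    if decision is Decision.REQUIRE_APPROVAL and not approver(action):
        return "rejected"
    return "executed"
```

Defaulting unknown actions to `DENY` keeps the agent inside its assessed scope when new tools are added before the risk analysis has caught up.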

4. Access Controls & IAM

As AI agents take on more active roles in enterprise workflows, they must be managed as non-human identities (NHIs), with their own identity lifecycle, access permissions, and governance policies. This requires integrating agents into existing identity and access management (IAM) frameworks, applying the same rigour used for human users.

Managing AI agents introduces new requirements. When acting on behalf of end-users, agents must be constrained to operate strictly within the permissions of those users, without exceeding or retaining elevated privileges. To achieve this, organisations should enforce key IAM principles:

- Just Enough Access (JEA): Limit agents to the minimum set of permissions required to complete specific tasks.

- Just in Time (JIT) access: Provision access temporarily and contextually to reduce standing privileges and exposure.

- Segregation of duties and scoped credentials: Define clear boundaries between roles and prevent unauthorised privilege escalation.
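A minimal sketch of how these principles combine when an agent acts on behalf of a user is shown below. The `Grant` type, scope strings, and five-minute default lifetime are all assumptions for illustration; a real deployment would rely on the organisation's IAM platform rather than hand-rolled checks.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class Grant:
    agent_id: str
    scopes: FrozenSet[str]  # what the agent may do
    expires_at: float       # timestamp after which the grant is void


def issue_grant(agent_id: str, requested: Iterable[str], user_scopes: Iterable[str],
                now: float, ttl_seconds: float = 300.0) -> Grant:
    """Issue a short-lived grant limited to scopes the delegating user also holds."""
    # Just Enough Access: only the requested scopes, never more than the user has.
    scopes = frozenset(requested) & frozenset(user_scopes)
    # Just in Time: the grant expires instead of becoming a standing privilege.
    return Grant(agent_id, scopes, now + ttl_seconds)


def is_authorised(grant: Grant, scope: str, now: float) -> bool:
    """Check both expiry and scope on every action."""
    return now < grant.expires_at and scope in grant.scopes
```

For example, an agent that requests `calendar:read` and `mail:send` on behalf of a user holding only calendar permissions receives `calendar:read` alone, and loses even that once the grant expires.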

In addition, to further enhance control, security teams should implement real-time anomaly detection to monitor agent behaviour, flag policy violations, and automatically remediate or escalate issues when necessary.

Access to sensitive data must also be tightly restricted. Violations should trigger immediate revocation of privileges and deny lists should be used to block known malicious patterns or endpoints.

Ultimately, while technical controls are essential, they should be supported by human oversight and governance mechanisms, particularly when agents operate in high-impact or sensitive contexts. IAM for agentic AI must evolve in step with these systems’ increasing autonomy and integration into critical business functions.

5. AI Crisis Response & Red teaming

While AI-specific controls are essential, traditional measures like crisis management must also extend into the AI landscape. As cyberattacks become more sophisticated, organisations should prepare crisis management strategies for potential AI failures or compromises, ensuring that all teams involved, such as AI scientists, operational teams, and security teams, are equipped to respond quickly and effectively to minimise disruption.

Concrete guidelines for CISOs

This year CISOs will be exposed to increased threats introduced by agentic AI alongside ongoing regulatory pressure from complex regulations such as DORA, NIS 2 and the AI Act. Both CISOs and CTOs will collaborate closely, with CISOs overseeing the secure deployment of AI systems to ensure that agent interactions are carefully mapped and secured to safeguard the security of their organisations, workforce and customers.

Key starting points for CISOs:

- Limit access to AI agents by enforcing strong access controls and aligning with existing IAM policies.

- Monitor agent behaviour by tracking activity and conducting regular audits to identify vulnerabilities.

- Filter the agent’s inputs and outputs to ensure that the decision-maker does not launch any unintended action.

- Implement Human-in-the-Loop oversight to validate AI outputs for critical decisions/tasks.

- Provide agentic AI awareness training to educate employees on the risks, security best practices, and how to identify potential attacks.

- Perform AI red teaming on the agent, to identify potential weaknesses.

- Establish clear accountability for any wrongful actions taken by AI agents. Despite all security measures, AI operates on probabilistic rather than deterministic principles, so an agent might occasionally behave inappropriately.

- Prepare for AI crises early by initiating discussions with relevant teams to ensure a coordinated response if an incident occurs.

Over the past several years, Wavestone has observed a marked increase in client maturity around AI security. Many organisations have already implemented robust processes to assess the sensitivity of AI initiatives and to manage associated risks. These early efforts have proven valuable in reducing exposure and strengthening governance.

While agentic AI does not fundamentally rewrite the AI security playbook, it does introduce a meaningful shift in the risk landscape. Its inherently autonomous, interconnected nature increases both the impact and likelihood of certain threats. The complexity of these systems can be challenging at first, but they are manageable. With a clear understanding of these dynamics and the emergence of new market standards and security protocols, agentic AI can deliver on its transformative potential.

As this transition unfolds, we remain committed to helping CISOs and their teams navigate the evolving risk environment with confidence.

References

[1] Orlando, Fla., Gartner Identifies the Top 10 Strategic Technology Trends for 2025, October 21, 2024. https://www.gartner.com/en/newsroom/press-releases/2024-10-21-gartner-identifies-the-top-10-strategic-technology-trends-for-2025

[2] Stamford, Conn., Gartner Predicts Agentic AI Will Autonomously Resolve 80% of Common Customer Service Issues Without Human Intervention by 2029, March 5, 2025. https://www.gartner.com/en/newsroom/press-releases/2025-03-05-gartner-predicts-agentic-ai-will-autonomously-resolve-80-percent-of-common-customer-service-issues-without-human-intervention-by-20290

[3] Stamford, Conn. Gartner Survey Shows AI-Enhanced Malicious Attacks Are a New Top Emerging Risk for Enterprises, May 22, 2024. https://www.gartner.com/en/newsroom/press-releases/2024-05-22-gartner-survey-shows-ai-enhanced-malicious-attacks-are-new0

[4] OWASP, OWASP Top 10 threats and mitigation for AI Agents, 2025. OWASP-Agentic-AI/README.md at main · precize/OWASP-Agentic-AI · GitHub

Thank you to Leina HATCH for her valuable assistance in writing this article.