A deepfake is a form of synthetic content that emerged in 2017, leveraging artificial intelligence to create or manipulate text, images, videos, and audio with high realism. Initially, these technologies were used for entertainment or as demonstrations of future capabilities. However, their malicious misuse now overshadows these original purposes, representing a growing threat and a significant challenge to digital trust.

Malicious uses of deepfakes can be grouped into three main categories:

- Disinformation and enhanced phishing: Falsified videos with carefully crafted messages can be exploited to manipulate public opinion, influence political debates, or spread false information. They can also make phishing attacks more credible by prompting targets to click on malicious links. Such impersonation has already targeted public figures and company CEOs, sometimes to encourage fraudulent investments.

- CEO fraud and social engineering: Traditional telephone scams and CEO fraud are harder to detect when attackers use deepfakes to imitate an executive’s voice or fully impersonate someone (face and voice) to obtain sensitive information. Such live identity theft scams, especially via videoconferencing, have already resulted in significant financial losses, as seen in Hong Kong in early 2024 [1].

- Identity theft to circumvent KYC (Know Your Customer) solutions [2]: Increasingly, applications, especially in banking, use real-time facial verification for identity checks. By digitally altering the facial image submitted, malicious actors can impersonate others during these verification processes.

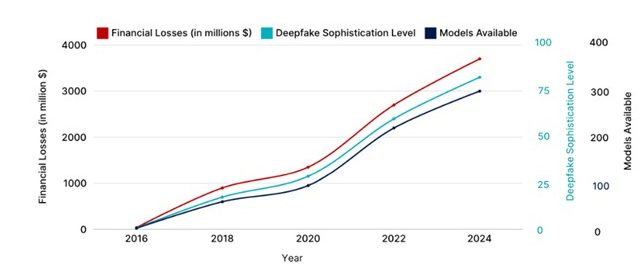

The rapid growth of generative artificial intelligence has led to a steady increase in both the number and sophistication of deepfake generation models. Companies suffer such attacks more and more often (as evidenced by our latest CERT-W annual report), and the attacks are increasingly difficult to detect and counter.

Figure 1 – Increase in deepfake technologies and resulting financial losses

Humans remain the primary target and therefore the first line of defense in the information system against this type of attack. However, we have seen a significant evolution in the maturity of these technologies over the past year, and it is becoming increasingly difficult to distinguish between what is real and what is fake with the naked eye.

After supporting many companies with employee training and awareness, we saw the need to analyze tools that could strengthen their defenses. Having reliable deepfake detection solutions is no longer just a technical issue: it is a necessity to protect IT systems against intrusions, maintain trust in digital exchanges, and preserve the reputation of individuals and companies.

Our Radar of deepfake detection solutions presents about 30 mature providers we have tested rigorously, allowing us to identify initial trends in this emerging market.

For our technical tests, some providers supplied versions of their solutions deployed in environments similar to those used by their customers. We then built a database of deepfake samples that vary by media type (audio only, image, video, live interaction), format (sample size, duration, extension), and the deepfake tools used to generate them.

To best extract market trends from these tests, we considered three distinct evaluation criteria:

- Performance (deepfake detection capability, false positive rate, response time, etc.)

- Deployment (ease of integration into a client environment, deployment support and documentation)

- User experience (understanding of results, ease of use of the tool, etc.)

An emerging market that has already proven itself in real-world conditions

Two different technologies to achieve the same goal

We first categorized the different solutions offered according to the type of content detected:

- 56% of solutions detect based on visual media data (image, video)

- 50% of solutions opt for detection based on audio data (simple audio file or audio from a video)

This balanced distribution of content types enabled us to compare the performance of each technology. While most of the solutions developed rely on artificial intelligence models trained to classify AI-generated content, the processing of a visual file (such as a photo) or an audio file (such as an MP3) differs greatly in the types of AI models used. We could therefore expect differences in performance between these two technologies.

However, our technical tests show that the accuracy of the solutions is relatively similar for both image and audio processing.

| Solution type | Deepfakes detected as malicious |
|---|---|
| Image or video processing | 92.5% |
| Audio processing | 96% |

We also identified leading providers developing live audio and video deepfake detection, capable of processing sources in under 10 seconds, which addresses today’s most dangerous attack vectors.

19% of solutions offer live detection of deepfakes, integrated into videoconferencing software or devices.

These solutions, which mainly process audio, correctly identified 73% of the deepfakes submitted to them. This shows how much room for improvement remains for these young players in detecting state-of-the-art live attacks.

From PoC to deployment at scale, a step already taken by some

The maturity of solutions on our radar also varies. While some providers are start-ups that emerged to meet this specific need, others are not new to the market. Some of the companies we met had their core business in other areas before entering this market, including biometric identification, artificial intelligence tools, and even AI-powered multimedia content generators. These players therefore have the knowledge and experience to offer their customers a packaged service that can be deployed on a large scale, as well as post-deployment support.

Younger startups are also maturing and moving beyond the PoC phase by offering companies a range of deployment options:

- API requests, which can be integrated into other software, remain the preferred way to call deepfake detection services.

- Comprehensive SaaS GUI [6] platforms. Some of these platforms have already been deployed on-premises in certain contexts, particularly in the banking and insurance sectors.

- On-device Docker containers, which allow plug-ins to be added to audio and video devices or videoconferencing software for integration tailored to specific detection needs.
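To make the API option above more concrete, here is a minimal sketch of what such an integration could look like from the client side. Everything specific in it is an assumption made for illustration: the endpoint URL, the bearer-token authentication, and the `deepfake_score` response field do not come from any provider on the radar, and each real service defines its own contract.

```python
import json
import urllib.request

# Hypothetical endpoint, used only for illustration (not a real vendor URL).
API_URL = "https://detector.example.com/v1/analyze"


def build_request(media: bytes, content_type: str, api_key: str) -> urllib.request.Request:
    """Prepare a POST request carrying the raw media file to analyze."""
    return urllib.request.Request(
        API_URL,
        data=media,
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": content_type,          # e.g. "audio/mpeg"
        },
        method="POST",
    )


def parse_response(body: bytes) -> float:
    """Extract the score from an assumed JSON body: {"deepfake_score": 0.93}."""
    payload = json.loads(body)
    score = float(payload["deepfake_score"])  # hypothetical field name
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    return score


def analyze(media: bytes, content_type: str, api_key: str, timeout: float = 10.0) -> float:
    """Send the media to the (hypothetical) service and return its score."""
    request = build_request(media, content_type, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_response(response.read())
```

Separating request construction and response parsing from the network call keeps the integration easy to test offline and easy to adapt when switching providers.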

Use cases for deepfake detection solutions: trends and developments

Use cases specific to critical business needs that require protection

To meet diverse market needs, solution providers have specialized in specific use cases. In addition to answering the question “deepfake or original content?”, some providers are developing and offering additional features to target specific uses for their solutions.

We have grouped the various offerings from providers into broad categories to help us understand market trends:

- KYC and identity verification: in banking onboarding or online account opening processes, deepfake detection makes it possible to distinguish between a real video of a user and an AI-generated imitation. This protects financial institutions against identity theft and money laundering. These solutions can provide “liveness” scores or match rates for the person being identified in order to refine detection.

- Social media monitoring and source identification: To prevent fake media or information from damaging their clients’ reputations, some solution providers monitor social media or analyze multimedia content in email attachments to enable rapid response. These solutions can help identify how, and with which deepfake model, the malicious content was produced, which helps trace the source of the attack.

- Falsified documents and insurance fraud: A number of players have turned their attention to combating insurance fraud and false identity documents. Their solutions seek to detect alterations in supporting documents or photos of damage by highlighting how and which parts of the original image have been modified.

- Detection of telephone scams and identity theft in video calls: these types of attacks are on the rise and rely on the creation of realistic imitations of a manager’s voice or face, in particular to deceive employees and obtain transfers or sensitive information. Most detection systems targeting these attacks have developed capabilities for full integration into video call software or sound cards on the devices to be protected.

Each solution is designed with specific features aligned with market needs to maximize the relevance and operational effectiveness of detection solutions.

Open source as the initiator, proprietary solutions to take over

While proprietary solutions dominate, open-source approaches also have a place in this field. These initiatives are important for academic research and experimentation, but they often remain less effective and less robust in the face of sophisticated deepfakes.

While some open-source tools offer very good results on controlled test benches (up to 90% detection performance [7]), proprietary solutions offered by specialized publishers generally perform better in production. They also stand out in terms of support: regular updates, technical support, and maintenance services, which are essential for critical environments such as finance, insurance, and the public sector. This difference is gradually creating a gap between open-source research and commercial offerings, where reliability and integration into complex environments are becoming key selling points.

False positives: the remaining challenge

Many vendors emphasize their deepfake detection capabilities. We felt it was important to extend our testing to understand how these solutions perform on false positives: is real content correctly recognized as genuine, or is it wrongly flagged as a deepfake?

The evaluations we conducted on several detection solutions highlight contrasting results depending on the type of content.

- For images and video: nearly 40% of the solutions tested still have difficulty correctly managing false positives. With these solutions, between 50% and 70% of the real images analyzed are considered deepfakes. This limits their reliability, especially when they are subjected to large amounts of content.

- On the audio side, the solutions stand out with more robust performance on false positives: only 7%. Only a few particularly altered (but non-AI) or poor-quality samples were detected as deepfakes by some solutions.
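The two measures discussed here, detection rate and false positive rate, can be computed from a labeled test set as in the sketch below. The class and function names are hypothetical, and the sample counts are made-up figures chosen for illustration, not the data from our tests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verdict:
    is_deepfake: bool  # ground truth: was the sample actually generated?
    flagged: bool      # what the detection solution reported


def detection_rate(verdicts: list[Verdict]) -> float:
    """Share of deepfake samples that the solution flagged as deepfakes."""
    fakes = [v for v in verdicts if v.is_deepfake]
    if not fakes:
        raise ValueError("no deepfake samples in the test set")
    return sum(v.flagged for v in fakes) / len(fakes)


def false_positive_rate(verdicts: list[Verdict]) -> float:
    """Share of real samples that the solution wrongly flagged as deepfakes."""
    reals = [v for v in verdicts if not v.is_deepfake]
    if not reals:
        raise ValueError("no real samples in the test set")
    return sum(v.flagged for v in reals) / len(reals)


# Illustrative test set: 40 deepfakes (37 caught) and 10 real samples (6 wrongly flagged).
samples = (
    [Verdict(True, True)] * 37
    + [Verdict(True, False)] * 3
    + [Verdict(False, True)] * 6
    + [Verdict(False, False)] * 4
)

print(f"detection rate: {detection_rate(samples):.1%}")
print(f"false positive rate: {false_positive_rate(samples):.1%}")
```

A solution like the one in this example would look strong if judged on detection alone, which is why both figures need to be measured on the same test campaign.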

To address these issues, some vendors are combining image/video and audio processing. Currently, these modalities are usually scored separately, but some publishers are working on ways to use the two scores in a complementary manner to limit false positives and improve accuracy.
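One simple way to picture such a combination is a weighted average of the two scores, compared against a single threshold. The sketch below is an assumption made for illustration only: the function names, weights, and threshold are hypothetical, and it does not describe how any vendor on the radar actually merges its results.

```python
from typing import Optional


def fuse_scores(
    visual: Optional[float],
    audio: Optional[float],
    visual_weight: float = 0.5,
) -> float:
    """Combine per-modality deepfake scores (0 = real, 1 = deepfake).

    If only one modality is available, its score is used as is.
    """
    for score in (visual, audio):
        if score is not None and not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")
    if not 0.0 <= visual_weight <= 1.0:
        raise ValueError("visual_weight must be between 0 and 1")
    if visual is None and audio is None:
        raise ValueError("at least one score is required")
    if visual is None:
        return audio
    if audio is None:
        return visual
    return visual_weight * visual + (1.0 - visual_weight) * audio


def is_deepfake(
    visual: Optional[float],
    audio: Optional[float],
    threshold: float = 0.5,
    visual_weight: float = 0.5,
) -> bool:
    """Flag the content only when the fused score reaches the threshold."""
    return fuse_scores(visual, audio, visual_weight) >= threshold


# A real video that the visual model alone would flag (0.7) is no longer
# flagged once a confident audio score (0.1) is weighted in more heavily.
print(is_deepfake(visual=0.7, audio=None))
print(is_deepfake(visual=0.7, audio=0.1, visual_weight=0.4))
```

Giving more weight to the modality with the lower false positive rate is one plausible design choice; the right weights and threshold would have to be tuned on representative data.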

What does the future hold for deepfake detection?

Current solutions are effective under most present conditions. However, as technologies and attack methods rapidly evolve, vendors will face two major challenges.

The first challenge is detecting content from unknown generative tools. While most solutions handle common technologies well, their performance drops with newer, less-documented methods.

The second challenge is real-time detection. Currently, only 19% of solutions offer this feature, and their performance is still insufficient to meet future needs. In contrast, notable progress is already being made in audio detection, which is emerging as a promising advance for enhancing security in critical scenarios involving phishing or CEO fraud via deepfake audio calls.

The market maturity of these cutting-edge technologies is accelerating, and there is every reason to believe that detection solutions will quickly catch up with the latest advances in deepfake creation. The next few years will be decisive in seeing the emergence of more reliable, faster tools that are better integrated with business needs.