The first part of this article looked at the risks that deepfakes pose to businesses. This part focuses on the strategies available to counter deepfakes and on the concrete actions businesses can take now to reduce those risks.

DIFFERENT STRATEGIES TO SAFEGUARD AGAINST DEEPFAKES

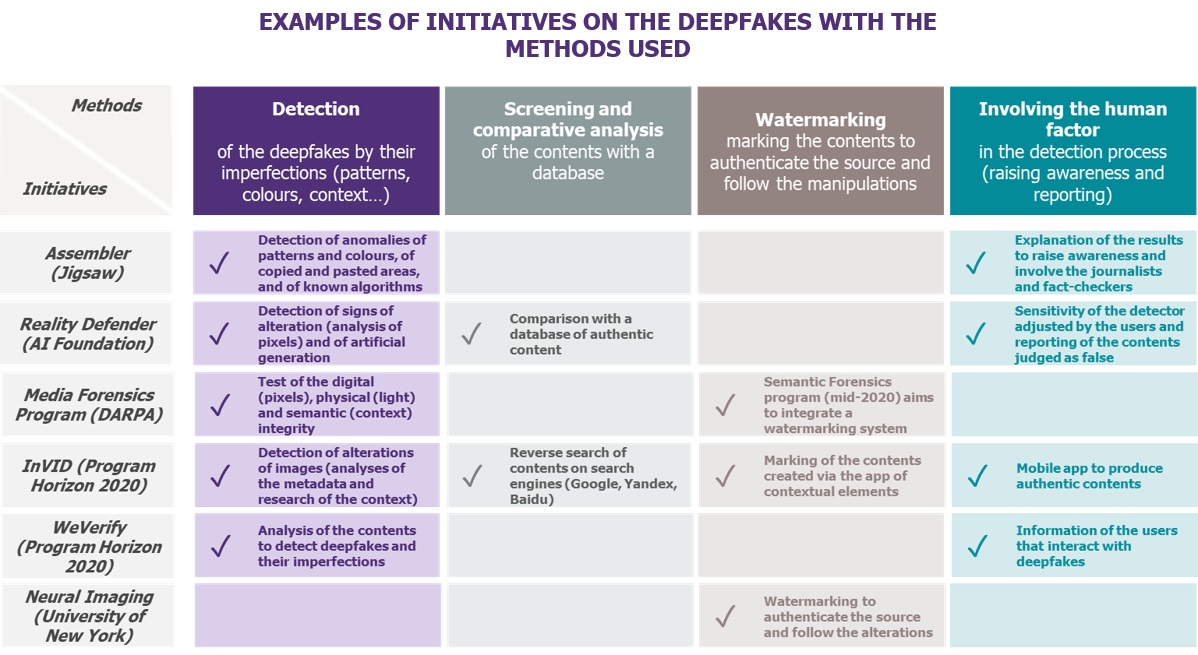

Alongside the legal framework, public and private organisations are working on solutions to detect deepfakes and prevent their malicious spread. Four strategies to safeguard against deepfakes can be distinguished.

1/ Detecting imperfections

Spotting deepfakes through their imperfections is one of the main existing methods. Generated content still contains irregularities, such as a lack of blinking, poor synchronisation between lips and voice, distortions of the face and of accessories (the arms of glasses, for instance), or inconsistencies in the context (weather, location).
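To make the blinking example concrete, here is a minimal Python sketch of a blink-rate check. It assumes that an upstream face-analysis step, not shown here, already produces one eye-openness score per frame (0 for closed, 1 for open). The function names, the `closed_below` threshold and the `min_blinks_per_minute` value are illustrative assumptions and are not taken from any tool mentioned in this article.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count blinks in a sequence of per-frame eye-openness scores.

    A blink is counted each time the score drops below `closed_below`
    after having been at or above it. The threshold is an assumption.
    """
    blinks = 0
    eye_closed = False
    for score in eye_openness:
        if score < closed_below and not eye_closed:
            blinks += 1          # open -> closed transition starts a blink
            eye_closed = True
        elif score >= closed_below:
            eye_closed = False   # eye reopened, ready for the next blink
    return blinks


def looks_suspicious(eye_openness, fps, min_blinks_per_minute=5.0):
    """Flag a clip whose blink rate is below an assumed minimum rate."""
    duration_minutes = len(eye_openness) / fps / 60
    if duration_minutes == 0:
        return False             # nothing to analyse
    rate = count_blinks(eye_openness) / duration_minutes
    return rate < min_blinks_per_minute
```

A low blink rate is only one weak signal. A real detector would combine several signals, and this particular cue is likely to fade as generation techniques improve.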

Deepfake generators, however, are built to learn from their mistakes and to produce content that is ever closer to the original, which makes the imperfections harder to perceive. Tools that rely on this detection strategy can be effective, but they need constant improvement to catch ever more subtle anomalies.

One example in this category is Assembler, a tool for journalists developed by Jigsaw (a unit of Alphabet, Google’s parent company). It helps verify the authenticity of content by analysing it with five detectors, which look among other things for anomalies in patterns and colours, copied-and-pasted areas, and known characteristics of deepfake algorithms.

2/ Screening and comparative analysis

Another strategy is to compare content with a database of authentic content, or to look for similar content on search engines, to see whether it has been manipulated (for instance, by finding the same video with a different face).
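One common way to implement this kind of comparison is perceptual hashing: each image is reduced to a short fingerprint, and fingerprints that are close but not identical suggest an altered copy of a known original. The Python sketch below uses a simple "average hash" on small grayscale grids. It is an illustration under stated assumptions, not the method used by any tool named in this article, and the function names and the `references` dictionary are hypothetical.

```python
def average_hash(pixels):
    """Return a list of bits: 1 where a pixel is brighter than the image mean.

    `pixels` is a 2D list of grayscale values, assumed to be already
    resized to a small fixed grid (for example 8x8) by an earlier step.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Number of positions at which two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def closest_reference(candidate, references):
    """Return (name, distance) for the authentic image closest to `candidate`.

    `references` maps a name to a pixel grid of known authentic content.
    """
    candidate_hash = average_hash(candidate)
    distances = (
        (name, hamming_distance(candidate_hash, average_hash(grid)))
        for name, grid in references.items()
    )
    return min(distances, key=lambda item: item[1])
```

A distance of zero points to the same picture, possibly re-encoded or uniformly brightened. A small non-zero distance points to a local change, which is the kind of result that would justify a closer look at the content.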

In 2020, the AI Foundation is expected to release Reality Defender, a plugin for web browsers and, in time, for social networks. It will detect manipulated content, focusing first on content featuring politicians. Users will be able to adjust the tool’s sensitivity according to the manipulations they do or do not want to detect, so that they are not notified of every manipulation, notably the most ordinary ones (a photo on a web page retouched in Photoshop, for example).

3/ Watermarking

A third method consists of marking content with a digital watermark, which makes authentication easier by recording the source of the content and tracking the manipulations made to it.

A team at New York University is working on a research project to build a camera with embedded watermarking technology. The aim is not only to authenticate the original photograph, but also to mark and track every manipulation made to it throughout its lifecycle.
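The idea of authenticating the original and then tracking each manipulation can be illustrated with a signed edit history. The Python sketch below is not a watermark embedded in the pixels and is not the New York University design. It is a simplified stand-in that keeps a separate log, in which each entry records a hash of the content and is chained to the previous entry with an HMAC signature. The shared secret `key` and all function names are assumptions made for the example.

```python
import hashlib
import hmac
import json


def sign_record(record, key):
    """Sign a record (a dict) with HMAC-SHA256 over its canonical JSON form."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def append_entry(history, content, action, key):
    """Return a new history with one more signed entry for `content`."""
    record = {
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        # Chaining to the previous signature makes reordering detectable.
        "previous_signature": history[-1]["signature"] if history else "",
    }
    entry = dict(record, signature=sign_record(record, key))
    return history + [entry]


def verify_history(history, content, key):
    """Check every signature and link, then check the final content hash."""
    previous = ""
    for entry in history:
        record = {k: v for k, v in entry.items() if k != "signature"}
        if record["previous_signature"] != previous:
            return False
        if not hmac.compare_digest(entry["signature"], sign_record(record, key)):
            return False
        previous = entry["signature"]
    current_hash = hashlib.sha256(content).hexdigest()
    return bool(history) and history[-1]["content_sha256"] == current_hash
```

In this sketch, a camera would call `append_entry` at capture time and each editing tool would call it after a change. Anyone holding the key could then confirm that a file matches its declared history, while a file altered outside that chain would fail verification.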

4/ Involving the human factor

Involving users in the detection process both mitigates the impact of deepfakes, by making users aware that the content they access may have been altered, and reduces their occurrence, by letting users report the ones they suspect.

The Reality Defender plugin mentioned above will let users report content they judge to be fake in order to inform other users. In addition to the tool’s own analysis, users will be able to see whether content has been reported by others, which offers a second level of indication.

Some initiatives, run jointly by actors from different sectors, combine these four strategies for maximum effectiveness against deepfakes. Some are already used or tested by journalists. This is the case of InVID, an initiative developed under the European Union’s Horizon 2020 research and innovation funding programme and used by the French news agency Agence France-Presse (AFP).

Solutions and strategies are therefore emerging, the market is developing, and new solutions should appear very soon with the results of the Deepfake Detection Challenge. Facebook launched this anti-deepfake contest in the run-up to the American presidential election, and more than 2,600 teams signed up. Results are due on 22 April!

The table below presents examples of initiatives that combine different strategies to safeguard against deepfakes.

Different means to protect one’s activity

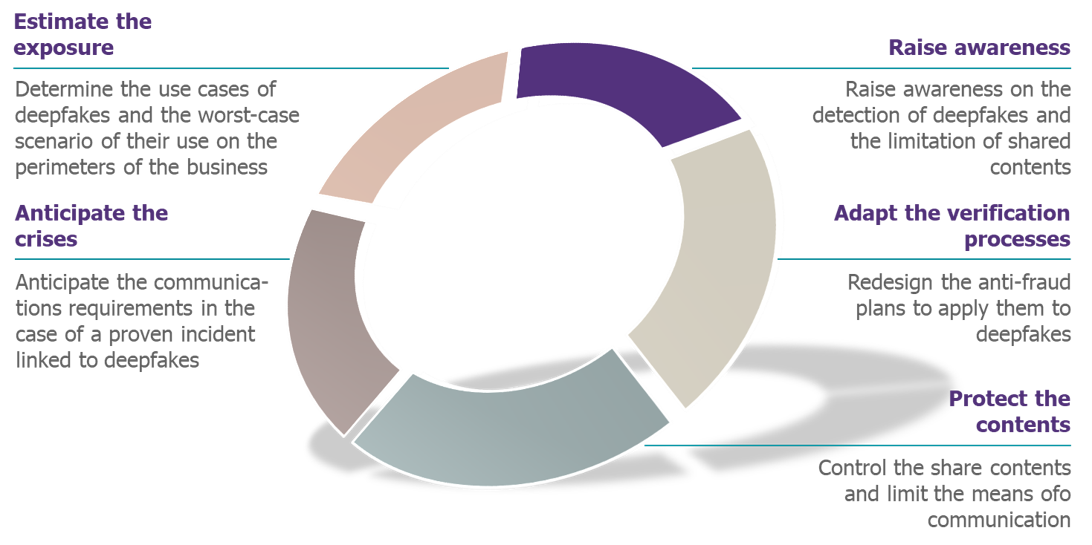

The risk that deepfakes pose to businesses is real, and a few actions can be taken right now to protect one’s activity and mitigate the impact.

- Estimating the exposure: The possible uses of deepfakes against the company, and the worst-case scenarios, must be determined across the company’s scope, taking into account the risks of fraud and of attempts to undermine the company, so that the appropriate security strategies can be identified.

- Raising awareness: Employees must be trained to detect deepfakes (to prevent fraud) and to limit the content they share on social media, which can be reused to create deepfakes (to prevent attempts to undermine the company). As with anti-phishing campaigns, this awareness campaign covers the detection of technical flaws in deepfakes (form), although these will tend to disappear as techniques improve, and above all the detection of suspicious information (content). It encourages the audience to be sceptical, to cross-check information and to report suspicions to the appropriate teams. What should I do if I see a suspicious video of my head of communications on social networks over the weekend? What should I do if I receive a voice message from my manager asking me to carry out a one-off operation that is slightly outside my remit?

- Adapting the verification processes: Existing anti-fraud plans can be adapted to cover deepfakes. For instance, in a “fake president” fraud (CEO fraud) carried out with a deepfake, one recommendation is to tell the caller that you will hang up and call them back (if possible on a known number, and after an internal check). For the most sensitive fraud scenarios, these response processes must be defined in detail, and the employees concerned must be trained regularly in the reflexes to adopt. Tools such as those described earlier can also be used to verify some or all of the media that employees use.

- Protecting the content: The company can control the content featuring its employees that it shares internally or externally, to prevent it from being reused to generate deepfakes. Businesses can limit the diversity of the data that malicious actors could use (the angles from which people are shown and the types of media) and adjust the digital quality (resolution) of the shared content. The more varied and high-quality the content featuring employees that malicious actors can obtain, the easier it is for them to generate deepfakes. Businesses can also restrict their communications to an official channel, verified social network accounts and their official websites. This builds viewing habits in the audience, who will then be suspicious of anything published outside those channels.

- Anticipating crises: The communication needs arising from a confirmed deepfake incident must be anticipated, and the handling of a deepfake case must be integrated with the “generic” communication scenarios addressed in crisis communication plans.
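The call-back rule described under “Adapting the verification processes” can be written down as a simple decision procedure, which helps when defining the process in detail and when training employees. The Python sketch below is only an illustration. The `Request` fields, the amount threshold, the directory of known numbers and the three outcomes are all hypothetical and would need to be adapted to each company’s own anti-fraud plan.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    claimed_sender: str                    # who the caller claims to be
    channel: str                           # "voice", "video", "email", ...
    amount: float                          # value of the requested operation
    called_back_on: Optional[str] = None   # number used for the call-back, if any


def next_step(request, known_numbers, sensitive_above=10_000.0):
    """Decide what an employee should do with an unusual request.

    `known_numbers` maps a person to the number held in the internal
    directory. `sensitive_above` is an assumed threshold for the example.
    """
    if request.amount < sensitive_above:
        return "process"                   # below the sensitive threshold
    known = known_numbers.get(request.claimed_sender)
    if known is None:
        return "escalate"                  # nobody to call back: involve the fraud team
    if request.called_back_on == known:
        return "process"                   # identity confirmed on a known number
    return "call back"                     # hang up and call the known number
```

For example, a voice message that appears to come from the chief executive and asks for a large transfer would return `"call back"` until the employee has reached that person on the number listed in the internal directory.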