In 2023, Artificial Intelligence received unprecedented media coverage. The reason: ChatGPT, a generative artificial intelligence capable of answering questions with astonishing precision. The potential uses are numerous and go beyond what we can currently foresee. So much so that some members of the scientific and industrial communities have suggested a six-month pause in AI research to reflect on the transformation occurring in our society.

As part of their commitment to supporting the digital transformation of clients while limiting the risks involved, Wavestone’s Cyber teams invite you to discover how cyber-attacks can be carried out on an AI system and how to protect against them.

Attacking an internal AI system (our CISO hates us)

Approach and objectives

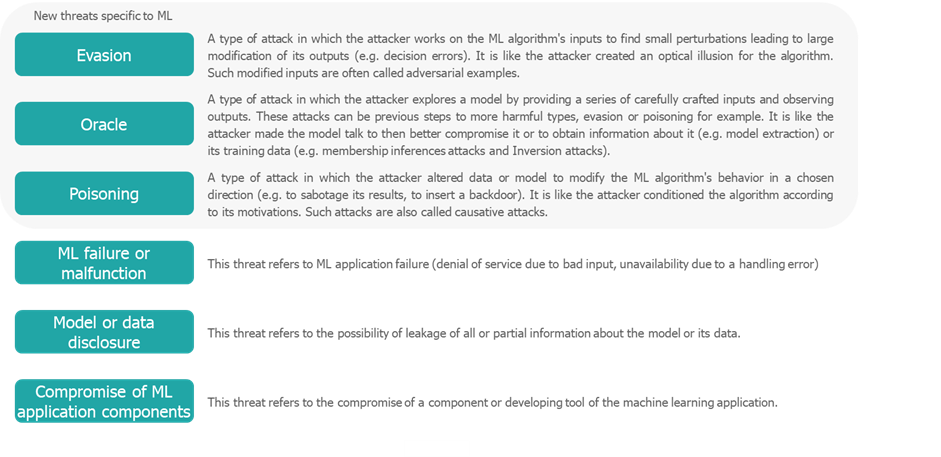

As demonstrated by recent work on AI systems[1] by ENISA[2] and NIST[3], AI is vulnerable to a number of cyber threats. These threats can be generic or specific to AI, but they affect all AI systems based on Machine Learning.

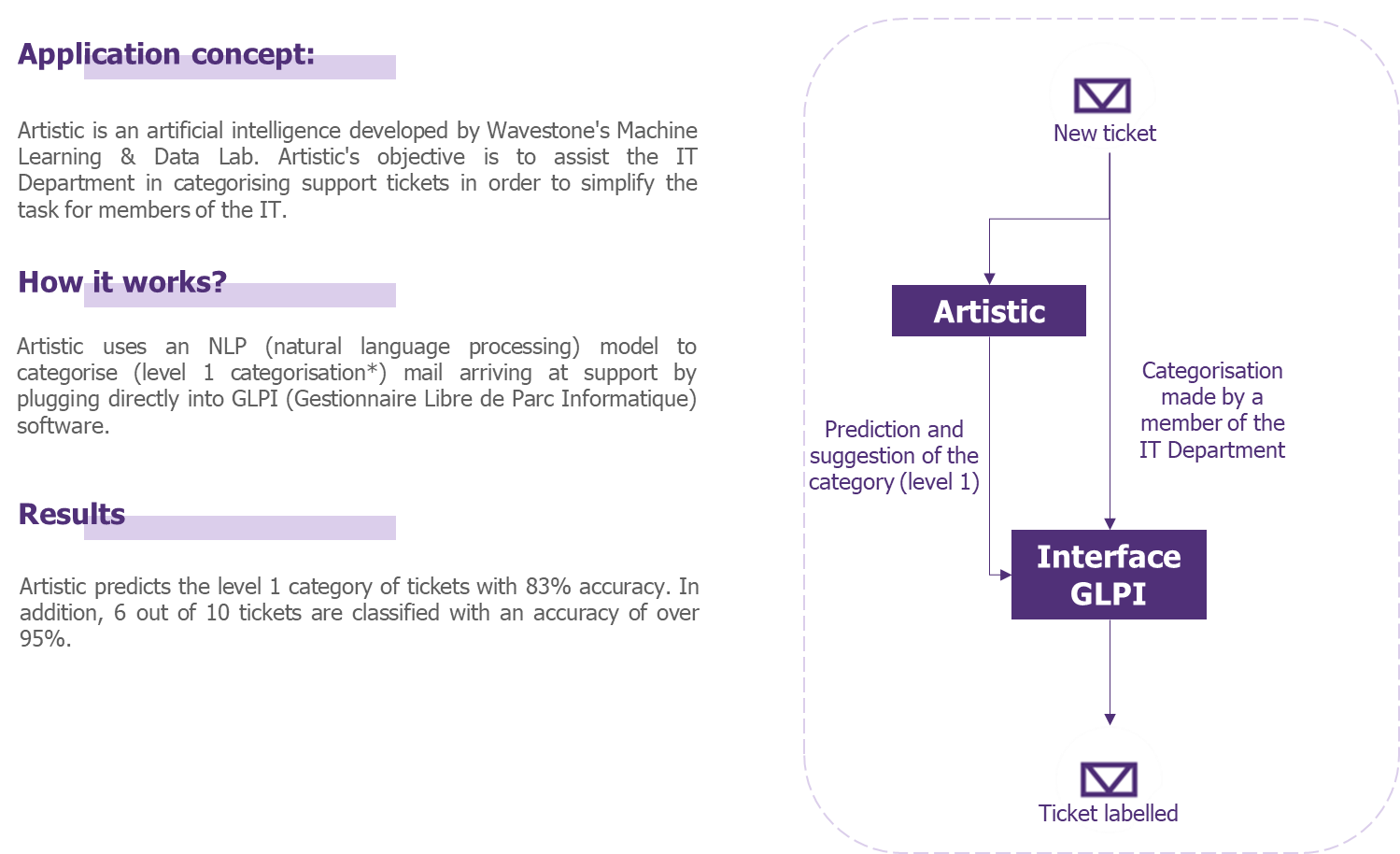

To check the feasibility of such threats, we wanted to test Evasion and Oracle threats on one of our low-impact internal applications: Artistic, a tool for classifying employee tickets[4] for IT support.

To do this, we put ourselves in the shoes of a malicious user who, knowing that ticket processing is based on an Artificial Intelligence algorithm, would try to carry out Evasion or Oracle-type attacks.

Obviously, the impact of such attacks is very low, but our AI is a great playground for experimentation.

Application overview

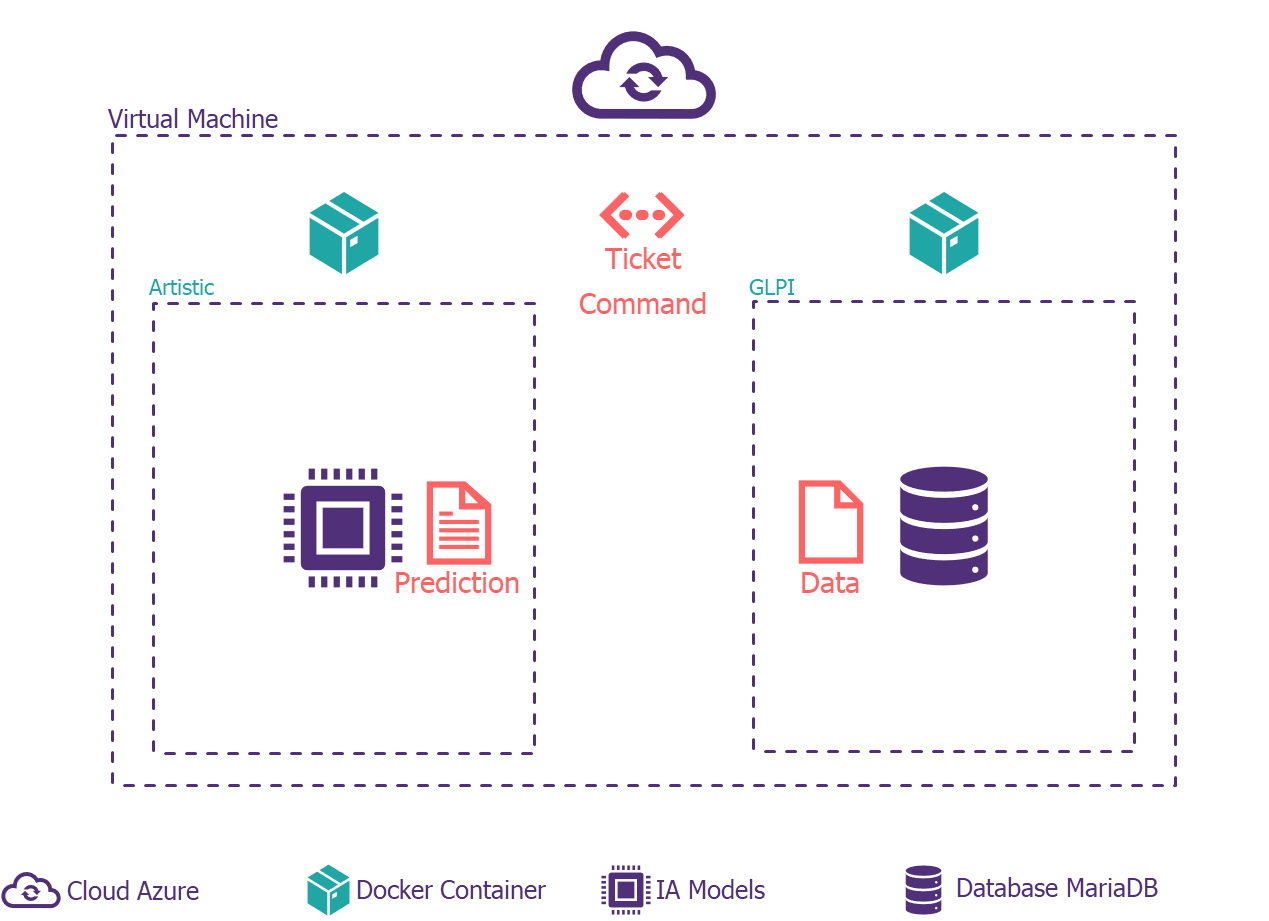

Application architecture

Evasion attack

Approach overview

An evasion attack consists of misleading the artificial intelligence by feeding it adversarial examples (also called “contradictory examples”) in order to cause inaccurate predictions. An adversarial example is an input containing intentional mistakes or changes that cause a machine learning model to make a false prediction. These changes, such as a typo in a word, can easily go unnoticed by a human but radically alter the model’s output.

For our example, we will try to build different adversarial examples using three techniques:

- Deleting and changing characters

- Replacing words with similar ones selected through word embeddings

- Changing the position of words
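To make these three families of changes concrete, here is a minimal pure-Python sketch. It is not how TextAttack implements them: the function names are ours, and the `NEIGHBOURS` table is a hypothetical stand-in for a real word-embedding model, which would supply the nearest neighbours of each word.

```python
import random

# Hypothetical nearest-neighbour table standing in for a real embedding model.
NEIGHBOURS = {
    "password": ["passcode", "credentials"],
    "laptop": ["notebook", "computer"],
    "broken": ["damaged", "faulty"],
}
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def perturb_characters(text, rng):
    """Delete or replace one inner character of a randomly chosen long word."""
    words = text.split()
    candidates = [i for i, w in enumerate(words) if len(w) > 3]
    if not candidates:
        return text
    i = rng.choice(candidates)
    word = words[i]
    pos = rng.randrange(1, len(word) - 1)  # keep the first and last letters
    if rng.random() < 0.5:
        word = word[:pos] + word[pos + 1:]  # deletion
    else:
        # Substitution with a letter that differs from the current one.
        choices = [c for c in ALPHABET if c != word[pos].lower()]
        word = word[:pos] + rng.choice(choices) + word[pos + 1:]
    words[i] = word
    return " ".join(words)


def replace_with_neighbour(text, rng):
    """Swap one known word for a neighbour from the (toy) embedding table."""
    words = text.split()
    candidates = [i for i, w in enumerate(words) if w.lower() in NEIGHBOURS]
    if not candidates:
        return text
    i = rng.choice(candidates)
    words[i] = rng.choice(NEIGHBOURS[words[i].lower()])
    return " ".join(words)


def swap_adjacent_words(text, rng):
    """Exchange two neighbouring words to change word order."""
    words = text.split()
    if len(words) < 2:
        return text
    i = rng.randrange(len(words) - 1)
    words[i], words[i + 1] = words[i + 1], words[i]
    return " ".join(words)


rng = random.Random(0)
ticket = "my laptop screen is broken"
for perturb in (perturb_characters, replace_with_neighbour, swap_adjacent_words):
    print(perturb.__name__, "->", perturb(ticket, rng))
```

Each function makes a single small change, so a human reader would still understand the ticket; an attacker would typically chain several of them.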

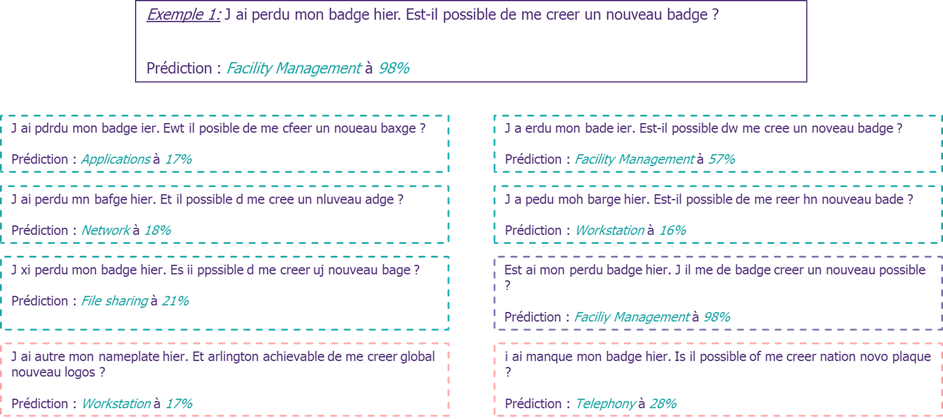

The adversarial examples in our use case are slightly modified written requests (see example 1 below), which will be categorised by the Artistic ticketing tool.

To do this, we use a dedicated tool: TextAttack. TextAttack is a Python framework for performing evasion attacks (the feature of interest in our case), training an NLP model with adversarial examples, and performing data augmentation in the NLP domain.

Results

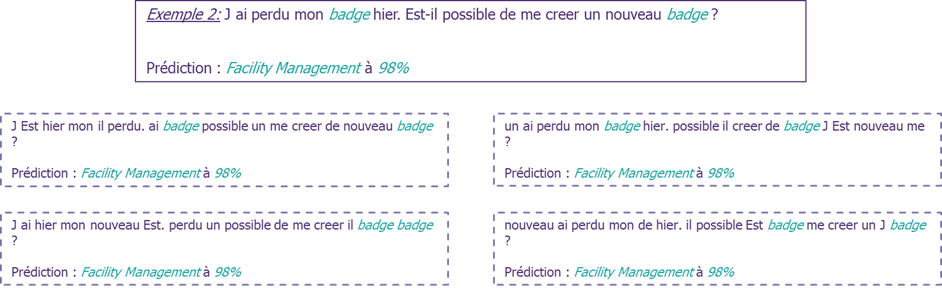

Consider a sentence correctly classified by our Artificial Intelligence with a high probability. Let’s now use the TextAttack framework to generate adversarial examples from this correctly classified sentence.

We observed that sentences which remain (more or less) comprehensible to a person can confuse the Artificial Intelligence to the point of misclassifying them. In addition, given enough adversarial examples, the model can be made to assign the same message to each of the classification categories, with varying confidence levels.
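The search for such a misclassified variant can be sketched in a few lines. The example below is a toy, not the TextAttack procedure we ran: `toy_classify` and its `KEYWORDS` table are hypothetical stand-ins for the ticket classifier, and the search simply tries character deletions, level by level, until the predicted label changes.

```python
# Toy stand-in for the ticket classifier: counts exact keyword hits per category.
KEYWORDS = {
    "hardware": {"laptop", "screen", "keyboard"},
    "access": {"password", "login", "account"},
}


def toy_classify(text):
    """Return the category with the most keyword hits, or 'other' if none match."""
    words = text.lower().split()
    hits = {label: sum(w in kws for w in words) for label, kws in KEYWORDS.items()}
    best = max(hits, key=hits.get)
    return best if hits[best] > 0 else "other"


def single_char_deletions(text):
    """Yield every variant of `text` with exactly one non-space character removed."""
    for i, ch in enumerate(text):
        if ch != " ":
            yield text[:i] + text[i + 1:]


def find_adversarial(text, classify, max_edits=2):
    """Breadth-first search for the fewest deletions that change the label.

    Returns (variant, new_label), or None if no variant within `max_edits`
    deletions is classified differently from the original text.
    """
    original = classify(text)
    frontier = [text]
    for _ in range(max_edits):
        next_frontier = []
        for candidate in frontier:
            for variant in single_char_deletions(candidate):
                label = classify(variant)
                if label != original:
                    return variant, label
                next_frontier.append(variant)
        frontier = next_frontier
    return None


ticket = "my laptop screen is broken"
print(toy_classify(ticket), "->", find_adversarial(ticket, toy_classify))
```

Note that the attacker only needs the predicted label for each query, not the model’s parameters. Real frameworks replace the exhaustive search with heuristics that rank words by importance, since the number of variants grows quickly with the length of the text.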

By extension, with more critical Artificial Intelligence models, such incorrect predictions cause a number of problems:

- Security breaches: the model in question is compromised, and attackers can cause it to produce inaccurate predictions

- Reduced confidence in AI systems: such attacks undermine trust in AI and discourage the adoption of such models, calling into question the potential of this technology

However, according to ENISA, a number of measures can be implemented to protect against this type of attack:

- Define a model that is more robust against evasion attacks. Artistic’s AI system is not particularly robust to these attacks and is very basic in its operation (as we shall see later). A different model would probably have been more resistant to evasion attacks.

- Use adversarial training during the model learning phase. This consists of adding examples of attacks to the training data so that the model improves its ability to classify “strange” data correctly.

- Implement checks on the model’s input data to ensure the ‘quality’ of the words entered.
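As an illustration of the last measure, an input check can be as simple as measuring how many words of a ticket are unknown to the system. The sketch below is one possible approach, not an ENISA recommendation in code form: the `KNOWN_WORDS` vocabulary and the 0.3 threshold are hypothetical and would need to be derived from real tickets.

```python
import re

# Hypothetical vocabulary; in practice it could be built from the training tickets.
KNOWN_WORDS = {
    "my", "laptop", "screen", "is", "broken", "i", "forgot",
    "password", "the", "please", "reset",
}


def out_of_vocabulary_ratio(text, vocabulary=KNOWN_WORDS):
    """Return the share of tokens in `text` that are not in `vocabulary`."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 1.0  # treat empty or non-alphabetic input as fully unknown
    unknown = [t for t in tokens if t not in vocabulary]
    return len(unknown) / len(tokens)


def is_suspicious(text, threshold=0.3):
    """Flag a ticket whose share of unknown words exceeds `threshold`."""
    return out_of_vocabulary_ratio(text) > threshold


for ticket in ("my laptop screen is broken", "my aptop creen is broken"):
    print(ticket, "->", "review" if is_suspicious(ticket) else "classify")
```

A flagged ticket could be routed to a human or passed through a spell-checker before classification. Such a check only catches character-level changes; word replacement and reordering produce valid words and would pass unnoticed.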

Oracle attack

Definition

Oracle attacks involve studying AI models and attempting to obtain information about the model by interacting with it via queries. Unlike evasion attacks, which aim to manipulate the input data of an AI model, Oracle attacks attempt to extract sensitive information about the model itself and the data it has processed (the type of training data used, for example).

In our use case, we are simply trying to understand how the model works. To do this, we analysed the input-output pairs obtained from our adversarial examples.

Results

Through repeated trials, the attacker may be able to detect how sensitive the model is to changes in the input data. From the example above, we can see that the algorithm used by the application predicts the class of a message by assigning a score to each word and then deriving the category from these scores. By analysing these various results, the attacker may be able to deduce the model’s vulnerabilities to evasion attacks.
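One simple way to run such trials is leave-one-out probing: remove each word in turn and observe how the output changes. The sketch below assumes, for illustration only, that the black box returns a score per category; `query`, `WEIGHTS` and `LABELS` are hypothetical and merely simulate a model that the attacker can call but not inspect.

```python
# Toy black box: the attacker may call `query` but never reads WEIGHTS directly.
WEIGHTS = {
    "laptop": {"hardware": 2.0},
    "screen": {"hardware": 1.5},
    "password": {"access": 2.5},
    "login": {"access": 1.0},
}
LABELS = ("hardware", "access")


def query(text):
    """Return one score per category, as a verbose prediction API might."""
    scores = dict.fromkeys(LABELS, 0.0)
    for word in text.lower().split():
        for label, weight in WEIGHTS.get(word, {}).items():
            scores[label] += weight
    return scores


def probe_word_influence(text, query_fn):
    """Leave-one-out probing: drop each word in turn and record the score change."""
    words = text.split()
    baseline = query_fn(text)
    influence = {}
    for i, word in enumerate(words):
        reduced = " ".join(words[:i] + words[i + 1:])
        scores = query_fn(reduced)
        influence[word] = {
            label: baseline[label] - scores[label] for label in baseline
        }
    return influence


for word, delta in probe_word_influence("my laptop screen needs a password", query).items():
    print(f"{word:10s} {delta}")
```

With one query per word, the attacker recovers which words drive each category and by how much. Those are exactly the words to target in a subsequent evasion attack.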

By extension, with more critical AI systems, Oracle-type attacks pose several problems:

- Infringement of intellectual property: an Oracle attack can enable the theft of the model architecture, hyperparameters, etc. Such information can be used to create a replica of the model.

- Attacks on the confidentiality of training data: this attack may reveal sensitive, possibly confidential, information about the data used to train the model.

A few measures can be implemented to protect against this type of attack:

- Define a model that is more robust to Oracle-type attacks. Artistic’s AI system is very basic and easy to understand.

- For AI more broadly, ensure that the model respects differential privacy. Differential privacy is an extremely strong definition of privacy that guarantees a limit to what an attacker with access to the results of the algorithm can learn about each individual record in the dataset.
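To give an intuition of how differential privacy limits what an attacker can learn, here is a minimal sketch of the Laplace mechanism applied to a counting query. This is the simplest textbook illustration, not a differentially private training procedure for a model; the `private_count` helper and the ticket data are ours, and the choice of `epsilon` is an assumption that depends on the use case.

```python
import random


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon gives epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    scale = 1.0 / epsilon
    # The difference of two independent exponential samples with mean `scale`
    # follows a Laplace distribution centred on 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(42)
tickets = ["hardware", "access", "hardware", "other", "hardware"]
for epsilon in (0.1, 1.0, 10.0):
    answer = private_count(tickets, lambda t: t == "hardware", epsilon, rng)
    print(f"epsilon={epsilon}: {answer:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy: the answer barely depends on whether any single record is present, so repeated queries reveal little about an individual record. The price is a less accurate result.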

Getting to grips with the subject in your organisation today

We have observed that even without precise knowledge of the parameters of an Artificial Intelligence model, it is relatively easy to carry out Evasion or Oracle-type attacks.

In our case, the impact is limited. However, the consequences of an evasion attack on an autonomous vehicle or an Oracle-type attack on a model used with health data are far more serious for individuals: physical damage in one case and invasion of privacy in the other.

A number of our customers are already starting to deploy initial measures to deal with the cyber risks created by the use of AI systems. In particular, they are developing their risk analysis methodology to take account of the threats outlined above, and most importantly they are putting in place relevant countermeasures, based on security guides such as those proposed by ENISA or NIST.

[1] An artificial intelligence system, in the AI Act legislative proposal, is defined as “software developed using one or more of the techniques and approaches listed in Annex I of the proposal and capable, for a given set of human-defined goals, of generating results such as content, predictions, recommendations, or decisions influencing the environments with which they interact.” In this article, we consider AI systems that have been trained via Machine Learning, as is generally the case in modern use cases such as ChatGPT.

[2] https://www.enisa.europa.eu/publications/securing-machine-learning-algorithms

[3] https://csrc.nist.gov/publications/detail/white-paper/2023/03/08/adversarial-machine-learning-taxonomy-and-terminology/draft

[4] A ticket represents a sequence of words (in other words, a sentence) in which the employee expresses his or her need.