The use of artificial intelligence systems and Large Language Models (LLMs) has exploded since 2023. Businesses, cybercriminals and individuals alike are beginning to use them regularly. However, like any new technology, AI is not without risks. To illustrate these, we have simulated two realistic attacks in previous articles: Attacking an AI? A real-life example! and Language as a sword: the risk of prompt injection on AI Generative.

This article provides an overview of the threat posed by AI and the main defence mechanisms to democratize their use.

AI introduces new attack techniques, already widely exploited by cybercriminals

As with any new technology, AI introduces new vulnerabilities and risks that need to be addressed in parallel with its adoption. The attack surface is vast: a malicious actor could attack both the model itself (model theft, model reconstruction, diversion from initial use) and its data (extracting training data, modifying behaviour by adding false data, etc.).

Prompt injection is undoubtedly the most talked-about technique. It enables an attacker to perform unwanted actions on the model, such as extracting sensitive data, executing arbitrary code, or generating offensive content.

Given the growing variety of attacks on AI models, we will take a non-exhaustive look at the main categories:

Data theft (impact on confidentiality)

As soon as data is used to train Machine Learning models, it can be (partially) reused to respond to users. A poorly configured model can then be a little too verbose, unintentionally revealing sensitive information. This situation presents a risk of violation of privacy and infringement of intellectual property.

And the risk is all the greater if the models are ‘overfitted’ with specific data. Oracle attacks take place when the model is in production, and the attacker questions the model to exploit its responses. These attacks can take several forms:

- Model extraction/theft: an attacker can extract a functional copy of a private model by using it as an oracle. By repeatedly querying the Machine Learning model’s API access, the adversary can collect the model’s responses. These responses will be used as labels to form a separate model that mimics the behaviour and performance of the target model.

- Membership inference attacks: this attack aims to check whether a specific piece of data has been used during the training of an AI model. The consequences can be far-reaching, particularly for health data: imagine being able to check whether an individual has cancer or not! This method was used by the New York Times to prove that its articles were used to train ChatGPT[1].

Destabilisation and damage to reputation (impact on integrity)

The performance of a Machine Learning model depends on the reliability and quality of its training data. Poison attacks aim to compromise the training data to affect the model’s performance:

- Model skewing: the attack aims to deliberately manipulate a model during training (either during initial training, or after it has been put into production if the model continues to learn) to introduce biases and steer the model’s predictions. As a result, the biased model may favour certain groups or characteristics, or be directed towards malicious predictions.

- Backdoors: an attacker can train and distribute a corrupted model containing a backdoor. Such a model functions normally until an input containing a trigger modifies its behaviour. This trigger can be a word, a date or an image. For example, a malware classification system may let malware through if it sees a specific keyword in its name or from a specific date. Malicious code can also be executed[2]!

The attacker can also add carefully selected noise to mislead the prediction of a healthy model. This is known as an adversarial or evasion attack:

- Evasion attack (adversarial attack): the aim of this attack is to make the model generate an output not intended by the designer (making a wrong prediction or causing a malfunction in the model). This can be done by slightly modifying the input to avoid being detected as malicious input. For example:

- Ask the model to describe a white image that contains a hidden injection prompt, written white on white in the image.

- Wear a special pair of glasses to avoid being recognised by a facial recognition algorithm[3].

- Add a sticker of some kind to a “Stop” sign so that the model recognises a “45km/h limit” sign[4].

Impact on availability

In addition to data theft and the impact on image, attackers can also hamper the availability of Artificial Intelligence (AI) systems. These tactics are aimed not only at making data unavailable, but also at disrupting the regular operation of systems. One example is the poisoning attack, the impact of which is to make the model unavailable while it is retrained (which also has an economic impact due to the cost of retraining the model). Here is another example of an attack:

- Denial of service attack (DDOS) on the model: like all other applications, Machine Learning models are sensitive to denial-of-service attacks that can hamper system availability. The attack can combine a high number of requests, while sending requests that are very heavy to process. In the case of Machine Learning models, the financial consequences are greater because tokens/prompts are very expensive (for example, ChatGPT is not profitable despite its 616 million monthly users).

Two ways of securing your AI projects: adapt your existing cyber controls, and develop specific Machine Learning measures

Just like security projects, a prior risk analysis is necessary to implement the right controls, while finding an acceptable compromise between security and the functioning of the model. To do this, our traditional risk methods need to evolve to include the risks detailed above, which are not well covered by historical methods.

Following these risk analyses, security measures will need to be implemented. Wavestone has identified over 60 different measures. In this second part, we present a small selection of these measures to be implemented according to the criticality of your models.

1. Adapting cyber controls to Machine Learning models

The first line of defence corresponds to the basic application, infrastructure, and organisational measures for cybersecurity. The aim is to adapt requirements that we already know about, which are present in the various security policies, but do not necessarily apply in the same way to AI projects. We need to consider these specificities, which can sometimes be quite subtle.

The most obvious example is the creation of AI pentests. Conventional pentests involve finding a vulnerability to gain access to the information system. However, AI models can be attacked without entering the IS (like evasion and oracle attacks). RedTeaming procedures need to evolve to deal with these particularities while developing detection and incident response mechanisms to cover the new applications of AI.

Another essential example is the isolation of AI environments used throughout the lifecycle of Machine Learning models. This reduces the impact of a compromise by protecting the models, training data, and prediction results.

You also need to assess the regulations and laws with which the Machine Learning application must comply, and adhere to the latest legislation on artificial intelligence (the IA Act in Europe, for example).

And finally, a more than classic measure: awareness and training campaigns. We need to ensure that the stakeholders (project managers, developers, etc.) are trained in the risks of AI systems and that users are made aware of these risks.

2. Specific controls to protect sensitive Machine Learning models

In addition to the standard measures that need to be adapted, specific measures need to be identified and applied.

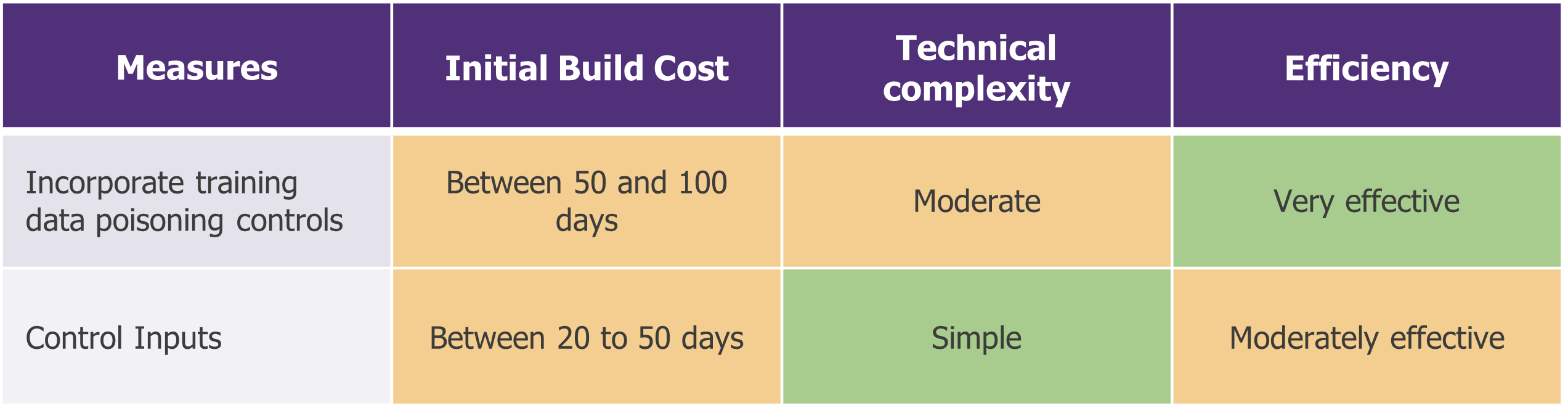

For your least critical projects, keep things simple and implement the basics

Poison control: to guard against poisoning attacks, you need to detect any “false” data that may have been injected by an attacker. This involves using exploratory statistical analysis to identify poisoned data (analysing the distribution of data and identifying absurd data, for example). This step can be included in the lifecycle of a Machine Learning model to automate downstream actions. However, human verification will always be necessary.

Input control (analysing user input): to counter prompt injection and evasion attacks, user input is analysed and filtered to block all malicious input. We can think of basic rules (blocking requests containing a specific word) as well as more specific statistical rules (format, consistency, semantic coherence, noise, etc.). However, this approach could have a negative impact on model performance, as false positives would be blocked.

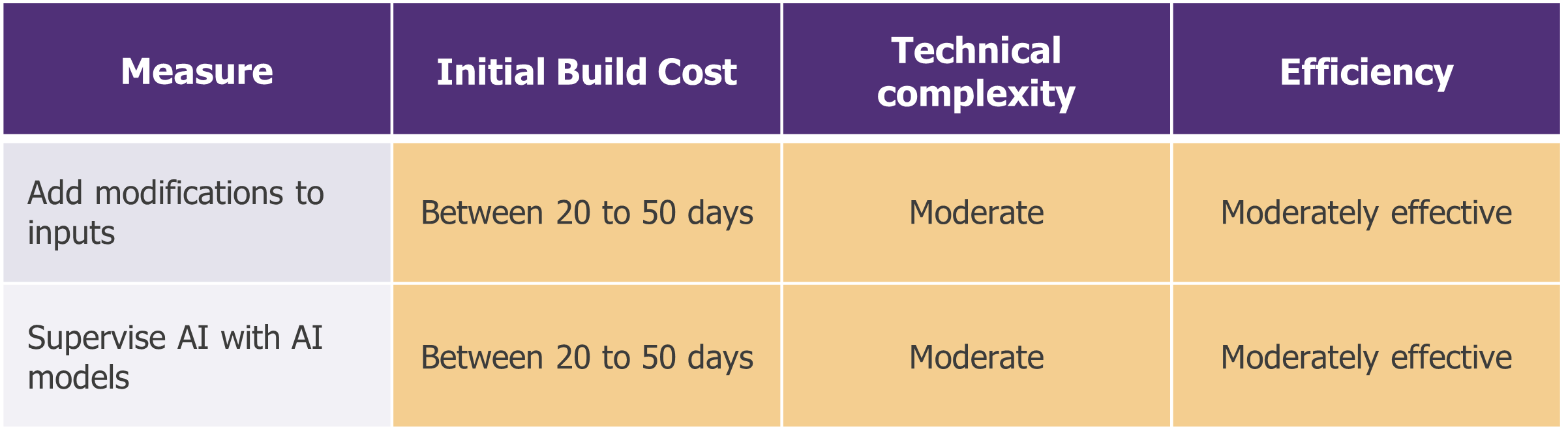

For your moderately sensitive projects, aim for a good investment/risk coverage ratio

There is a plethora of measures, and a great deal of literature on the subject. On the other hand, some measures can cover several risks at once. We think it is worth considering them first.

Transform inputs: an input transformation step is added between the user and the model. The aim is twofold:

- For example, remove or modify any malicious input by reformulating the input or truncating it. An implementation using encoders is also possible (but will be detailed in the next section).

- Another instance will be to reduce the attacker’s visibility to counter oracle attacks (which require precise knowledge of the model’s input and output) by adding random noise or reformulating the prompt.

Depending on the implementation method, impacts on model performance are to be expected.

Supervise AI with AI models: any AI model that learns after it has been put into production must be specifically supervised as part of overall incident detection and response processes. This involves both collecting the appropriate logs to carry out investigations, but also monitoring the statistical deviation of the model to spot any abnormal drift. In other words, it involves assessing changes in the quality of predictions over time. Microsoft’s Tay model launched on Twitter in 2016 is a good example of a model that has drifted.

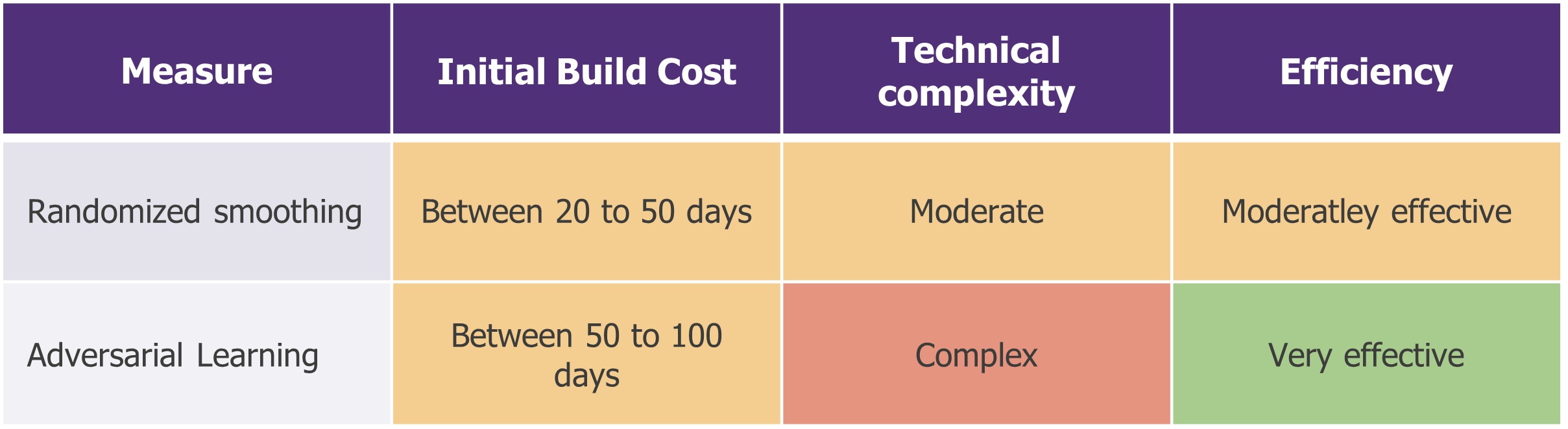

For your critical projects, go further to cover specific risks

There are measures that we believe are highly effective in covering certain risks. Of course, this involves carrying out a risk analysis beforehand. Here are two examples (among many others):

Randomized Smoothing: a training technique designed to improve the robustness of a model’s predictions. The model is trained twice: once with real training data, then a second time with the same data altered by noise. The aim is to have the same behaviour, whether noise is present in the input. This limits evasion attacks, particularly for classification algorithms.

Learning from contradictory examples: the aim is to teach the model to recognise malicious inputs to make it more robust to adversarial attacks. In practical terms, this means labelling contradictory examples (i.e. a real input that includes a small error/disturbance) as malicious data and adding them during the training phase. By confronting the model with these simulated attacks, it learns to recognise and counter malicious patterns. This is a very effective measure, but it involves a certain cost in terms of resources (longer training phase) and can have an impact on the accuracy of the model.

Versatile guardians – three sentinels of AI security

Three methods stand out for their effectiveness and their ability to mitigate several attack scenarios simultaneously: GAN (Generative Adversarial Network), filters (encoders and auto-encoders that are models of neural networks) and federated learning.

The GAN: the forger and the critic

The GAN, or Generative Adversarial Network, is an AI model training technique that works like a forger and a critic working together. The forger, called the generator, creates “copies of works of art” (such as images). The critic, called the discriminator, evaluates these works to identify the fakes from the real ones and gives advice to the forger on how to improve. The two work in tandem to produce increasingly realistic works until the critic can no longer identify the fakes from the real thing.

A GAN can help reduce the attack surface in two ways:

- With the generator (the faker) to prevent sensitive data leaks. A new fictitious training database can be generated, like the original but containing no sensitive or personal data.

- The discriminator (the critic) limits evasion or poisoning attacks by identifying malicious data. The discriminator compares a model’s inputs with its training data. If they are too different, then the input is classified as malicious. In practice, it can predict whether an input belongs to the training data by associating a likelihood scope with it.

Auto-encoders: an unsupervised learning algorithm for filtering inputs and outputs

An auto-encoder transforms an input into another dimension, changing its form but not its essence. To take a simplifying analogy, it’s as if the prompt were summarized and rewritten to remove undesirable elements. In practice, the input is compressed by a noise-removing encoder (via a first layer of the neural network), then reconstructed via a decoder (via a second layer). This model has two uses:

- If an auto-encoder is positioned upstream of the model, it will have the ability to transform the input before it is processed by the application, removing potential malicious payloads. In this way, it becomes more difficult for an attacker to introduce elements enabling an evasion attack, for example.

- We can use this same system downstream of the model to protect against oracle attacks (which aim to extract information about the data or the model by interrogating it). The output will thus be filtered, reducing the verbosity of the model, i.e. reducing the amount of information output by the model.

Federated Learning: strength in numbers

When a model is deployed on several devices, a delocalised learning method such as federated learning can be used. The principle: several models learn locally with their own data and only send their learning back to the central system. This allows several devices to collaborate without sharing their raw data. This technique makes it possible to cover a large number of cyber risks in applications based on artificial intelligence models:

- Segmentation of training databases plays a crucial role in limiting the risks of Backdoor and Model Skewing poisoning. The fact that training data is specific to each device makes it extremely difficult for an attacker to inject malicious data in a coordinated way, as he does not have access to the global set of training data. This same division limits the risks of data extraction.

- The federated learning process also limits the risks of model extraction. The learning process makes the link between training data and model behaviour extremely complex, as the model does not learn directly. This makes it difficult for an attacker to understand the link between input and output data.

Together, GAN, filters (encoders and auto-encoders) and federated learning form a good risk hedging proposition for Machine Learning projects despite the technicality of their implementation. These versatile guardians demonstrate that innovation and collaboration are the pillars of a robust defence in the dynamic artificial intelligence landscape.

To take this a step further, Wavestone has written a practical guide for ENISA on securing the deployment of machine learning, which lists the various security controls that need to be established.

In a nutshell

Artificial intelligence can be compromised by methods that are not usually encountered in our information systems. There is no such thing as zero risk: every model is vulnerable. To mitigate these new risks, additional defence mechanisms need to be implemented depending on the criticality of the project. A compromise will have to be found between security and model performance.

AI security is a very active field, from Reddit users to advanced research work on model deviation. That’s why it’s important to keep an organisational and technical watch on the subject.

[1] New York Times proved that their articles were in AI training data set

[2] Au moins une centaine de modèles d’IA malveillants seraient hébergés par la plateforme Hugging Face

[3] Sharif, M. et al. (2016). Accessorize to a crime: Real and stealthy attacks on state-of-the-art face recognition. ACM Conference on Computer and Communications Security (CCS)

[4] Eykholt, K. et al. (2018). Robust Physical-World Attacks on Deep Learning Visual Classification. CVPR. https://arxiv.org/pdf/1707.08945.pdf